In the world of machine learning and data preprocessing, the LabelEncoder from Scikit-Learn’s preprocessing module plays a crucial role. It’s a simple yet powerful tool that helps to transform categorical labels into numerical representations, making it easier for machine learning algorithms to process the data.

The LabelEncoder is one of the Scikit-Learn Encoders used for handling categorical data labels effectively.

What is LabelEncoder?

LabelEncoder is a Scikit-Learn utility class that converts categorical labels into numerical values. It assigns each unique category an integer from 0 to n_classes - 1 and is designed for encoding target labels (y) rather than input features.

Why is Label Encoding Important?

Most machine learning algorithms operate on numerical data and cannot directly handle categorical labels such as strings. Label encoding transforms these labels into a format that algorithms can process.

How Does LabelEncoder Work?

The process is straightforward:

- Each unique category is assigned a unique integer.

- For example, if you have labels ‘red’, ‘green’, and ‘blue’, LabelEncoder sorts the unique labels first, so ‘blue’ becomes 0, ‘green’ becomes 1, and ‘red’ becomes 2, regardless of the order in which they appear.
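The mapping can be inspected directly through the fitted encoder's `classes_` attribute. The following is a minimal sketch; the variable names `colors` and `mapping` are illustrative only.

```python
from sklearn.preprocessing import LabelEncoder

# Illustrative labels, deliberately not in alphabetical order
colors = ["red", "green", "blue", "green"]

encoder = LabelEncoder()
codes = encoder.fit_transform(colors)

# classes_ holds the sorted unique labels; a label's position is its integer code
mapping = {label: code for code, label in enumerate(encoder.classes_.tolist())}

print(mapping)         # {'blue': 0, 'green': 1, 'red': 2}
print(codes.tolist())  # [2, 1, 0, 1]
```

Because the codes follow sorted order, ‘red’ receives 2 even though it appears first in the input.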

When to Use LabelEncoder?

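LabelEncoder is most useful for encoding the target variable (the class labels) of a classification problem. The integers it produces follow the sorted order of the labels, not any meaningful ranking, so it is a poor fit for input features. If a feature has a genuine order, OrdinalEncoder lets you specify that order explicitly.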
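As a rough sketch of the target-encoding use case, the example below encodes string class labels, trains a classifier on the integer codes, and decodes the predictions back to strings. The toy data and the choice of `DecisionTreeClassifier` are assumptions made purely for illustration.

```python
from sklearn.preprocessing import LabelEncoder
from sklearn.tree import DecisionTreeClassifier

# Toy data (hypothetical): one numeric feature and a string target
X = [[1.0], [1.2], [3.0], [3.3]]
y = ["small", "small", "large", "large"]

# Encode the target: sorted order gives 'large' -> 0, 'small' -> 1
encoder = LabelEncoder()
y_encoded = encoder.fit_transform(y)

# Train on the integer codes
model = DecisionTreeClassifier(random_state=0).fit(X, y_encoded)

# Decode predictions back to the original string labels
predicted = encoder.inverse_transform(model.predict([[1.1], [3.1]]))
print(predicted.tolist())  # ['small', 'large']
```

Keeping the fitted encoder around is what makes it possible to report predictions in the original label vocabulary.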

Limitations of LabelEncoder

While LabelEncoder is handy, it has limitations:

- The integer codes imply an order (0 < 1 < 2) even when the underlying categories have none.

- Algorithms that treat inputs as quantities, such as linear or distance-based models, might misinterpret the encoded values as having mathematical significance.
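A small sketch of why the integer codes can mislead: the numeric gaps between codes are an artifact of alphabetical sorting, yet a model that treats the codes as quantities would read them as real distances. The `animals` list and the gap variables are illustrative names.

```python
from sklearn.preprocessing import LabelEncoder

animals = ["bird", "cat", "dog"]
codes = LabelEncoder().fit_transform(animals)  # bird=0, cat=1, dog=2

# Numeric "distances" between categories, as a model would see them
gap_bird_cat = abs(int(codes[0]) - int(codes[1]))
gap_bird_dog = abs(int(codes[0]) - int(codes[2]))

# 'dog' looks twice as far from 'bird' as 'cat' does, which is meaningless
print(gap_bird_cat, gap_bird_dog)  # 1 2
```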

Alternatives to LabelEncoder

If you are encoding categorical features that lack an ordinal relationship, consider techniques like One-Hot Encoding or Target Encoding instead.
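For comparison, here is a minimal sketch of One-Hot Encoding applied to a single nominal feature. The `colors` data is made up for illustration; `OneHotEncoder` expects 2-D input, so each value is wrapped in its own row.

```python
from sklearn.preprocessing import OneHotEncoder

# One nominal feature, one value per row (2-D input is required)
colors = [["red"], ["green"], ["blue"], ["green"]]

encoder = OneHotEncoder()
one_hot = encoder.fit_transform(colors).toarray()

# Columns follow the sorted categories: blue, green, red
print(encoder.categories_[0].tolist())  # ['blue', 'green', 'red']
print(one_hot)
```

Each row contains a single 1 in the column of its category, so no ordering between categories is implied.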

Python Code Examples

Example 1: Using LabelEncoder on Categorical Labels

from sklearn.preprocessing import LabelEncoder

#Create a LabelEncoder instance

encoder = LabelEncoder()

#Categorical labels

labels = ['cat', 'dog', 'bird', 'dog', 'cat']

#Encode labels

encoded_labels = encoder.fit_transform(labels)

print('Labels:\n',labels)

print('Encoded:\n',encoded_labels)

Example 2: Inverse Transform with LabelEncoder

from sklearn.preprocessing import LabelEncoder

#Create a LabelEncoder instance

encoder = LabelEncoder()

#Categorical labels

labels = ['cat', 'dog', 'bird', 'dog', 'cat']

#Encode labels

encoded_labels = encoder.fit_transform(labels)

#Inverse transform to get original labels

original_labels = encoder.inverse_transform(encoded_labels)

print('Labels:\n',labels)

print('Encoded:\n',encoded_labels)

print('Original Labels:\n',original_labels)

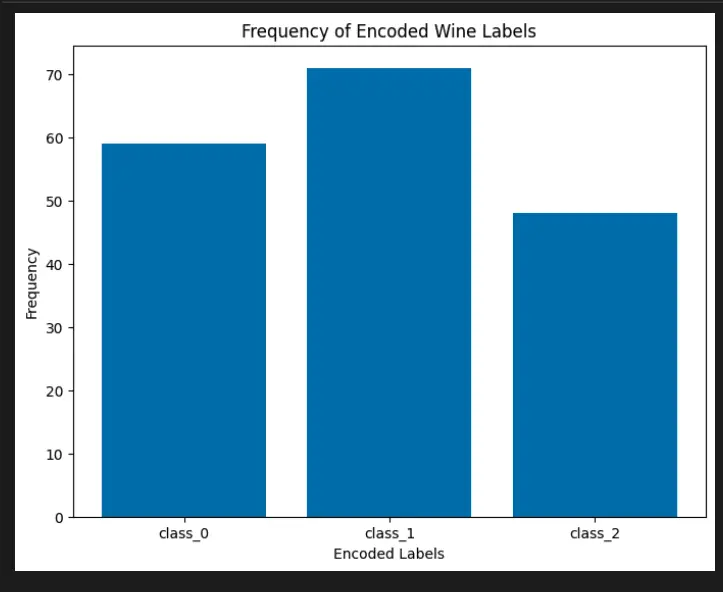

Visualize Scikit-Learn Preprocessing LabelEncoder with Python

To better understand how the LabelEncoder works, let’s visualize its effects on a built-in Scikit-learn dataset using the Matplotlib library.

import matplotlib.pyplot as plt

import numpy as np

from sklearn.preprocessing import LabelEncoder

from sklearn.datasets import load_wine

# Load the Wine dataset

wine = load_wine()

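# wine.target is already integer-coded, so map it back to the string
# class names ('class_0', ...) to give the encoder real labels to work on

labels = wine.target_names[wine.target]

# Create a LabelEncoder instance

encoder = LabelEncoder()

# Encode the string labels as integers

encoded_labels = encoder.fit_transform(labels)

# Count the frequency of each encoded label

label_counts = np.bincount(encoded_labels)

# Create a bar plot to visualize the encoded label frequencies

plt.figure(figsize=(8, 6))

# encoder.classes_ lists the original labels in the order of their codes

plt.bar(encoder.classes_, label_counts)

plt.xlabel('Wine Class (encoded as 0, 1, 2)')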

plt.ylabel('Frequency')

plt.title('Frequency of Encoded Wine Labels')

plt.show()

Sklearn Encoders

Scikit-Learn provides several encoders for handling categorical data. Three of the most commonly used are LabelEncoder, OneHotEncoder, and OrdinalEncoder.

- LabelEncoder converts categorical labels into sequential integer values, often used for encoding target variables in classification.

- OneHotEncoder transforms categorical features into a binary matrix, representing the presence or absence of each category. This prevents biases due to category relationships.

- OrdinalEncoder encodes ordinal categorical data by assigning numerical values based on order, maintaining relationships between categories.

These encoders play vital roles in transforming diverse categorical data types into formats compatible with various machine learning algorithms.

| Encoder | Advantages | Disadvantages | Best Use Case |
|---|---|---|---|
| LabelEncoder | Simple and efficient encoding. Designed for target variables. Reversible with inverse_transform. | Codes follow sorted label order, not a meaningful order. Not intended for input features. Raises an error on labels not seen during fitting. | Encoding the target variable in classification tasks. |
| OneHotEncoder | Prevents bias due to category relationships. Useful for nominal categorical features. Compatible with various algorithms. | Creates high-dimensional data. Potential multicollinearity issues. | Nominal features fed to algorithms that require numeric input. |
| OrdinalEncoder | Maintains ordinal relationships. Handles meaningful order. Useful for features with inherent hierarchy. | May introduce unintended relationships. Not suitable for nominal data. | Features with clear ordinal rankings, like education levels or ratings. |

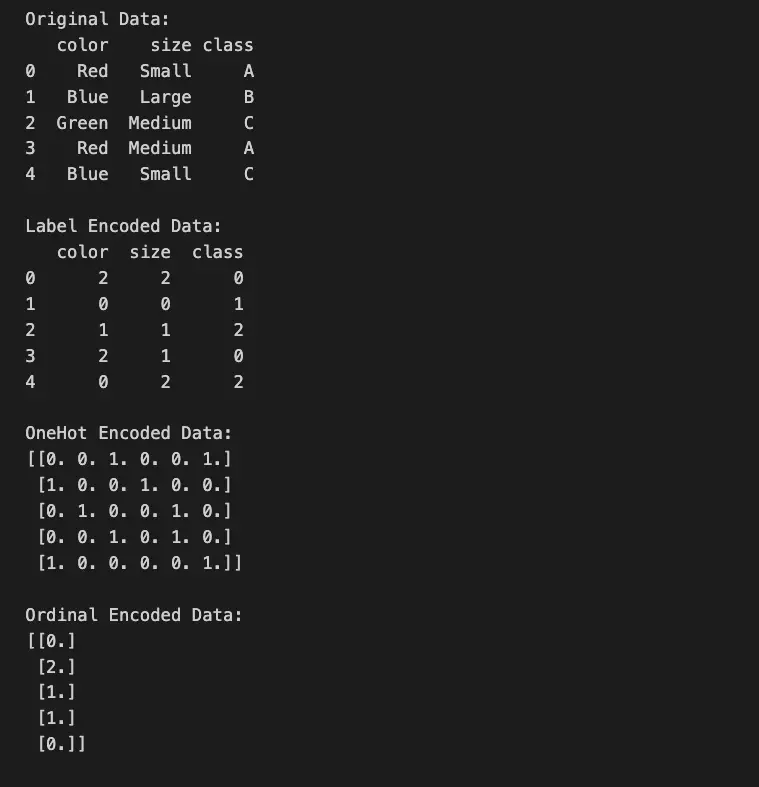

Python Example

from sklearn.preprocessing import LabelEncoder, OneHotEncoder, OrdinalEncoder

import pandas as pd

# Create a sample dataset

data = pd.DataFrame({

    'color': ['Red', 'Blue', 'Green', 'Red', 'Blue'],

    'size': ['Small', 'Large', 'Medium', 'Medium', 'Small'],

    'class': ['A', 'B', 'C', 'A', 'C']

})

# Using LabelEncoder

label_encoder = LabelEncoder()

data_label_encoded = data.copy()

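# Note: a LabelEncoder keeps only its most recent fit, so reusing one
# instance across columns retains just the last column's mapping

for column in data.columns:

    data_label_encoded[column] = label_encoder.fit_transform(data[column])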

# Using OneHotEncoder

onehot_encoder = OneHotEncoder()

data_onehot_encoded = onehot_encoder.fit_transform(data[['color', 'size']]).toarray()

# Using OrdinalEncoder

ordinal_encoder = OrdinalEncoder(categories=[['Small', 'Medium', 'Large']])

data_ordinal_encoded = ordinal_encoder.fit_transform(data[['size']])

print("Original Data:")

print(data)

print("\nLabel Encoded Data:")

print(data_label_encoded)

print("\nOneHot Encoded Data:")

print(data_onehot_encoded)

print("\nOrdinal Encoded Data:")

print(data_ordinal_encoded)
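If the column-wise LabelEncoder results need to be decoded later, one option is to keep a separate fitted encoder per column. The sketch below assumes a small DataFrame like the one above; the `encoders` dictionary is an illustrative helper, not part of Scikit-Learn.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

data = pd.DataFrame({
    "color": ["Red", "Blue", "Green", "Red", "Blue"],
    "class": ["A", "B", "C", "A", "C"],
})

# Keep one fitted encoder per column so every mapping stays available
encoders = {}
encoded = data.copy()
for column in data.columns:
    encoders[column] = LabelEncoder()
    encoded[column] = encoders[column].fit_transform(data[column])

# Decode a column with the encoder that was fitted on it
restored = encoders["color"].inverse_transform(encoded["color"])

print(encoded["color"].tolist())  # [2, 0, 1, 2, 0]
print(restored.tolist())          # ['Red', 'Blue', 'Green', 'Red', 'Blue']
```

For input features specifically, OrdinalEncoder or OneHotEncoder is usually the more natural fit, since both accept several columns at once.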

To learn more, read our blog post on Scikit-learn encoders.

Important Concepts in Scikit-Learn Preprocessing LabelEncoder

- Data Labeling

- Categorical Data

- Encoding

- Numerical Representation

- Label Mapping

What to Know Before You Learn Scikit-Learn Preprocessing LabelEncoder

- Basics of Machine Learning

- Understanding Categorical Data

- Python Programming

- Scikit-Learn Library

- Data Preprocessing Concepts

What’s Next?

- One-Hot Encoding

- Ordinal Encoding

- Feature Scaling

- Handling Missing Values

- Advanced Data Preprocessing Techniques

Relevant Entities

| Entity | Properties |
|---|---|
| LabelEncoder | Transforms categorical labels to numerical values |
| Categorical Labels | Non-numerical labels used to represent categories |
| Numerical Values | Encoded representations of categorical labels |
| Machine Learning Algorithms | Algorithms that process numerical data |
| One-Hot Encoding | Technique to convert categorical variables into binary vectors |
| Target Encoding | Technique that uses the target variable to encode categorical features |

Conclusion

The LabelEncoder is a simple but important preprocessing technique in machine learning. It bridges the gap between categorical labels and numerical algorithms, enabling seamless data processing.