In this tutorial, we will learn how to use Scikit-learn’s preprocessing.PolynomialFeatures through some Python examples.

What Are Polynomial Features in Machine Learning?

PolynomialFeatures is a Scikit-learn preprocessing transformer that generates polynomial and interaction terms from the input features, enabling linear algorithms to capture nonlinear relationships in the data.

How Does PolynomialFeatures Work?

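PolynomialFeatures takes the original feature matrix and returns a new matrix containing all polynomial combinations of the features with degree less than or equal to the specified degree. For example, with two features [a, b] and degree=2, each row becomes [1, a, b, a^2, ab, b^2]. The leading column of ones is a bias term, which is included by default.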

Why Use Polynomial Features?

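A linear model can only fit a straight line (or a flat hyperplane) in the original features. Once PolynomialFeatures adds terms such as x^2 or x1*x2, the model is still linear in its coefficients, but it can now fit curved relationships in the original inputs that it would otherwise miss.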
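A quick way to check this claim is to fit the same LinearRegression model with and without the expanded features. The sketch below assumes synthetic, noise-free data y = x^2 on a symmetric interval; it reports training R^2 only, so it illustrates representational capacity rather than generalization.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures

# Synthetic quadratic data (an assumption for illustration)
x = np.linspace(-3, 3, 50).reshape(-1, 1)
y = x.ravel() ** 2

# Plain linear model on the raw feature: can only fit a straight line
linear_r2 = LinearRegression().fit(x, y).score(x, y)

# Same linear model on [x, x^2]: can fit the parabola
x_poly = PolynomialFeatures(degree=2, include_bias=False).fit_transform(x)
poly_r2 = LinearRegression().fit(x_poly, y).score(x_poly, y)

print(f"Linear R^2:     {linear_r2:.3f}")
print(f"Polynomial R^2: {poly_r2:.3f}")
```

Because x and x^2 are uncorrelated on a symmetric interval, the straight-line fit explains almost none of the variance, while the expanded model fits the curve essentially exactly.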

Benefits of Polynomial Features

- Lets linear models fit non-linear relationships in the data.

- Expands the feature space with higher-order terms (such as x^2) and interaction terms (such as x1*x2).

- Useful when a plain linear model underfits.

Considerations

Keep in mind:

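- Higher degrees increase feature dimensionality and model complexity: the number of output features grows combinatorially with both the degree and the number of input features.

- Excessive degrees can lead to overfitting.

- Feature scaling becomes important, because high powers of large raw values produce features on very different scales.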
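The dimensionality point can be made concrete. With n input features and degree d, PolynomialFeatures (with its default bias column) produces C(n + d, d) output features, the number of monomials of degree at most d. The sketch below checks this against the fitted n_output_features_ attribute for an assumed 10 input features; the input values are zeros because only the shape matters.

```python
import math

import numpy as np
from sklearn.preprocessing import PolynomialFeatures

n_features = 10
X = np.zeros((1, n_features))  # values are irrelevant, only the shape matters

counts = {}
for degree in [1, 2, 3, 4, 5]:
    poly = PolynomialFeatures(degree=degree).fit(X)
    counts[degree] = poly.n_output_features_
    expected = math.comb(n_features + degree, degree)  # monomials of degree <= d
    print(f"degree={degree}: {counts[degree]} features (formula: {expected})")
```

Going from degree 2 to degree 5 on just 10 inputs takes the matrix from 66 to 3003 columns, which is why the degree is usually kept small or paired with regularization.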

Use Cases

PolynomialFeatures can be valuable in:

- Regression problems with non-linear data.

- Feature engineering to create new interaction features.

- Exploring relationships in data visualization.
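For the regression use case, PolynomialFeatures is typically placed inside a Pipeline so the same expansion is applied at fit and predict time. The sketch below is one reasonable setup, not the only one: it assumes synthetic data y = x^2 plus Gaussian noise, scales after the expansion so every generated column is on a comparable scale, and uses Ridge as the L2-regularized regressor to limit overfitting. The alpha value is an arbitrary choice for illustration.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Synthetic data (an assumption for illustration): y = x^2 plus Gaussian noise
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = X[:, 0] ** 2 + rng.normal(scale=0.5, size=200)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = make_pipeline(
    PolynomialFeatures(degree=2, include_bias=False),  # adds the x^2 column
    StandardScaler(),                                   # puts x and x^2 on a common scale
    Ridge(alpha=1.0),                                   # L2 penalty to limit overfitting
)
model.fit(X_train, y_train)

# R^2 on held-out data, so this measures generalization, not just fit
test_r2 = model.score(X_test, y_test)
print(f"Test R^2: {test_r2:.3f}")
```

Evaluating on a held-out split is what reveals overfitting when you raise the degree; a cross-validated search over degree and alpha is the natural next step.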

How to Use PolynomialFeatures

- Import PolynomialFeatures from sklearn.preprocessing.

- Create an instance of PolynomialFeatures with the desired parameters.

- Apply fit_transform to your feature matrix to generate the polynomial features.

Python Code Examples

Using PolynomialFeatures

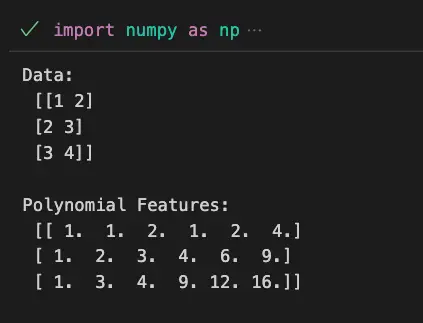

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# Create a simple dataset
X = np.array([[1, 2], [2, 3], [3, 4]])

# Create a PolynomialFeatures instance with degree 2
poly = PolynomialFeatures(degree=2)

# Transform the feature matrix
X_poly = poly.fit_transform(X)

print('Data:\n', X, '\n')
print('Polynomial Features:\n', X_poly)
```

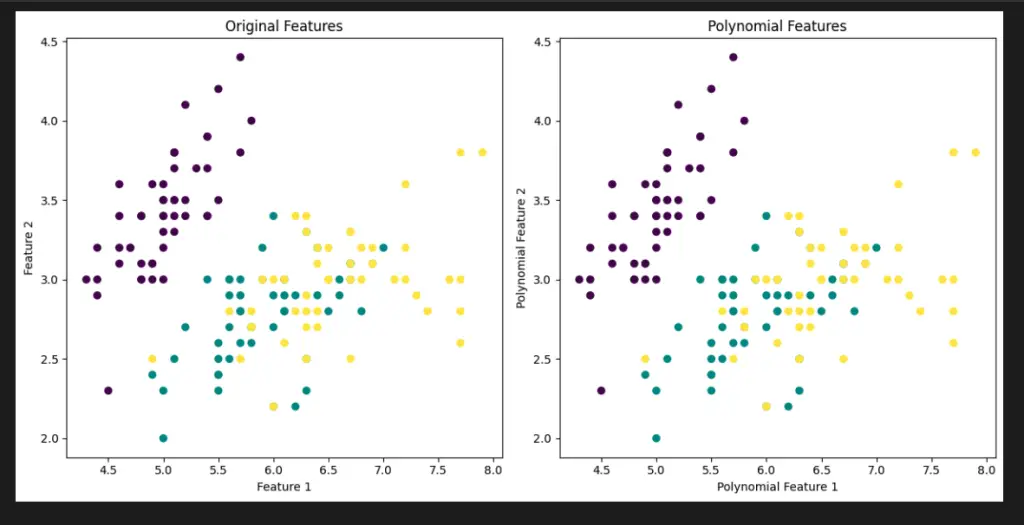

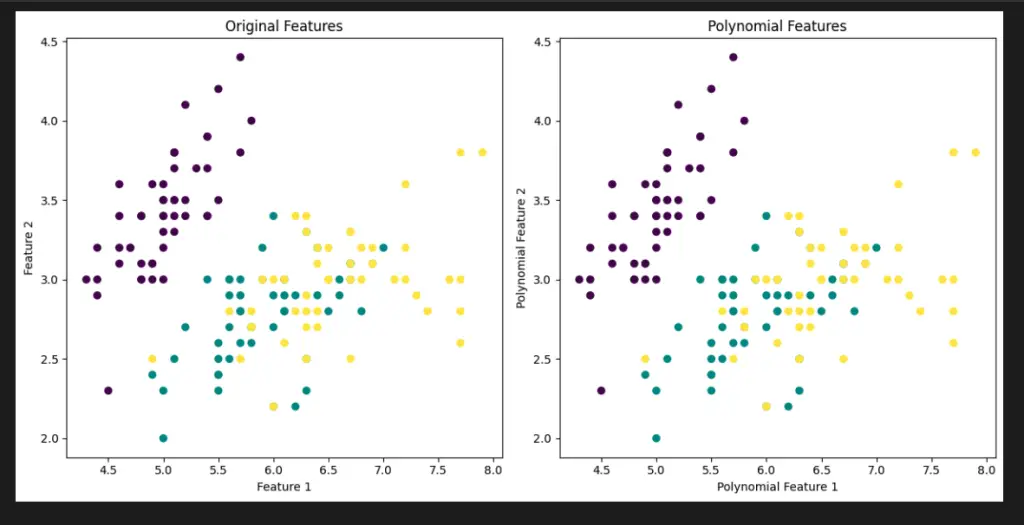

Visualize Scikit-Learn Preprocessing PolynomialFeatures with Python

Let’s use the well-known Iris dataset from Scikit-Learn to visualize the effects of PolynomialFeatures on feature interactions:

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.preprocessing import PolynomialFeatures

# Load the Iris dataset
iris = load_iris()
X = iris.data[:, :2]  # We'll use only the first two features
y = iris.target

# Apply PolynomialFeatures; output columns are 1, x1, x2, x1^2, x1 x2, x2^2
poly = PolynomialFeatures(degree=2)
X_poly = poly.fit_transform(X)
names = poly.get_feature_names_out(["x1", "x2"])

# Create a scatter plot of the original features
plt.figure(figsize=(12, 6))
plt.subplot(1, 2, 1)
plt.scatter(X[:, 0], X[:, 1], c=y, cmap='viridis')
plt.title("Original Features")
plt.xlabel("Feature 1 (x1)")
plt.ylabel("Feature 2 (x2)")

# Create a scatter plot of two generated features.
# Columns 1 and 2 of X_poly are just copies of x1 and x2,
# so plot x1^2 (column 3) against x1 x2 (column 4) instead.
plt.subplot(1, 2, 2)
plt.scatter(X_poly[:, 3], X_poly[:, 4], c=y, cmap='viridis')
plt.title("Polynomial Features")
plt.xlabel(names[3])
plt.ylabel(names[4])

plt.tight_layout()
plt.show()
```

In the left plot, you can see the original features; in the right plot, the same samples are plotted against features generated by PolynomialFeatures. The transformation adds no new information about the classes. It re-expresses each sample with nonlinear terms, which a downstream linear model can weight to form curved decision boundaries or regression surfaces.
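A plot alone does not show whether the extra terms help a model. A minimal sketch for checking that, assuming LogisticRegression as the downstream classifier (our choice; the original example only plots), compares mean 5-fold cross-validated accuracy on the same two Iris features for degrees 1 to 3. We make no claim about which degree wins; run it and compare.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

iris = load_iris()
X = iris.data[:, :2]  # same two features as the plot
y = iris.target

scores = {}
for degree in [1, 2, 3]:
    model = make_pipeline(
        PolynomialFeatures(degree=degree, include_bias=False),
        StandardScaler(),                    # scale generated columns before fitting
        LogisticRegression(max_iter=1000),   # linear classifier on expanded features
    )
    # Mean accuracy over 5 stratified folds
    scores[degree] = cross_val_score(model, X, y, cv=5).mean()
    print(f"degree={degree}: mean CV accuracy = {scores[degree]:.3f}")
```

Keeping the expansion inside the pipeline ensures each cross-validation fold fits the scaler on its own training split only.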

Important Concepts in Scikit-Learn Preprocessing PolynomialFeatures

- Feature Engineering

- Polynomial Degree

- Non-linear Relationships

- Overfitting and Model Complexity

- Feature Transformation

What to Know Before Learning Scikit-Learn Preprocessing PolynomialFeatures

- Understanding of linear and non-linear relationships in data.

- Familiarity with feature engineering and its importance in improving model performance.

- Basic knowledge of polynomial functions and their characteristics.

- Understanding of overfitting and the trade-off between model complexity and generalization.

- Familiarity with Scikit-Learn library and its preprocessing capabilities.

What’s Next?

- Feature Selection Techniques

- Regularization Methods

- Advanced Polynomial Features

- Model Training and Evaluation

- Hyperparameter Tuning

Relevant Entities

| Entity | Properties |
|---|---|
| PolynomialFeatures | Generates polynomial and interaction combinations of features. Transforms data so linear models can capture non-linear relationships. Expands the feature space. |
| Feature Matrix | Input array of shape (n_samples, n_features). Passed to PolynomialFeatures. |
| Degree | Parameter (degree) setting the maximum degree of the generated terms. Higher values produce more features and more complex models. |
| Non-linear Relationships | Relationships that a straight line or flat plane cannot describe. Not captured by plain linear models. Addressed by adding polynomial terms. |
| Feature Engineering | Process of creating new features to improve model performance. PolynomialFeatures is one feature-engineering tool. |


Conclusion

PolynomialFeatures is a simple and effective way to capture non-linear relationships in data. By expanding the feature space to include polynomial combinations, you can improve model accuracy when the underlying relationship is non-linear, provided you keep the degree modest and use scaling and regularization to control overfitting.