This article explores the binarizers in Scikit-Learn's preprocessing module. Binarization, the conversion of values into 0s and 1s, is a common data preprocessing step, and Scikit-Learn offers several tools for it.

What Are Scikit-Learn Preprocessing Binarizers?

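Scikit-Learn preprocessing binarizers are transformers that map data to binary values. Binarizer converts numerical features to 0 or 1 based on a specified threshold, while LabelBinarizer and MultiLabelBinarizer encode class labels as binary indicator columns.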

Why Use Binarization in Preprocessing?

Binarization reduces a numerical feature to a yes/no signal, such as whether a count is nonzero. This is useful for algorithms that assume binary inputs, for example Bernoulli Naive Bayes, which models each feature as a Bernoulli variable.

How Do Binarizers Work?

Binarizer maps values strictly greater than the threshold to 1 and values less than or equal to the threshold to 0. The default threshold is 0, so by default only positive values become 1.
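The thresholding rule can be checked on a small array. This is a minimal sketch with made-up values; the array `X` and the threshold of 0.5 are illustrative assumptions, chosen so that one value sits exactly on the threshold.

```python
import numpy as np
from sklearn.preprocessing import Binarizer

# Illustrative values; 0.5 lies exactly on the threshold.
X = np.array([[0.2, 0.5, 0.8],
              [1.5, -0.3, 0.5]])

binarizer = Binarizer(threshold=0.5)
X_bin = binarizer.fit_transform(X)

# Values strictly greater than 0.5 become 1.0; everything else,
# including the values equal to 0.5, becomes 0.0.
print(X_bin)
```

Note that the output keeps the floating-point dtype of the input, so the result contains 0.0 and 1.0 rather than integers.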

When to Use Binarizers?

Scikit-Learn preprocessing binarizers are useful when only the side of a cut-off matters and not the exact value: for example, whether a word appears in a document rather than how often, or whether a measurement exceeds a limit.

Binarizer Comparison: Advantages, Disadvantages, and Best Use Cases

| Binarizer | Advantages | Disadvantages | Best Use Cases |
|---|---|---|---|
| Binarizer | Transforms numerical features into binary values. Simple and easy to use. | Information loss due to simplification. May not capture nuanced relationships in data. | Creating binary features for specific attributes. Highlighting specific aspects of a feature. |

Python Examples With Different Sklearn Binarizers

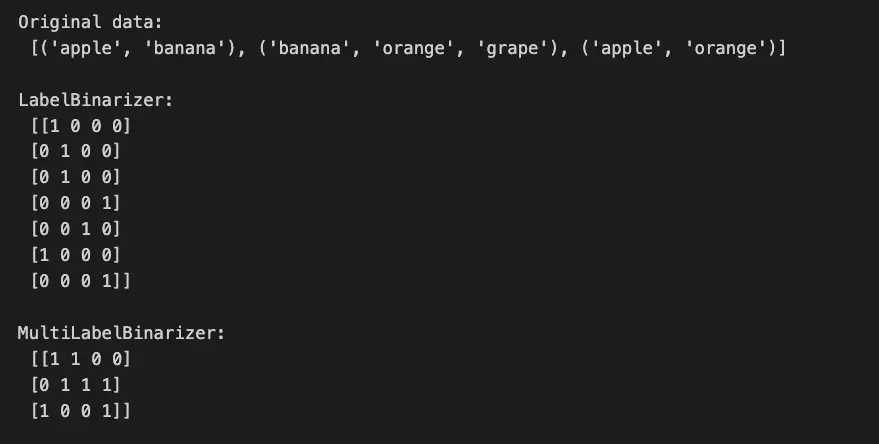

```python
from sklearn.preprocessing import LabelBinarizer, MultiLabelBinarizer

# Create the dataset
data = [('apple', 'banana'), ('banana', 'orange', 'grape'), ('apple', 'orange')]
print(f"Original data:\n {data}\n")

# Apply LabelBinarizer to the flattened list of individual labels
label_binarizer = LabelBinarizer()
label_binarized_data = label_binarizer.fit_transform([label for item in data for label in item])
print("LabelBinarizer:\n", label_binarized_data)

# Apply MultiLabelBinarizer to the label sets themselves
multi_label_binarizer = MultiLabelBinarizer()
multi_label_binarized_data = multi_label_binarizer.fit_transform(data)
print("\nMultiLabelBinarizer:\n", multi_label_binarized_data)
```

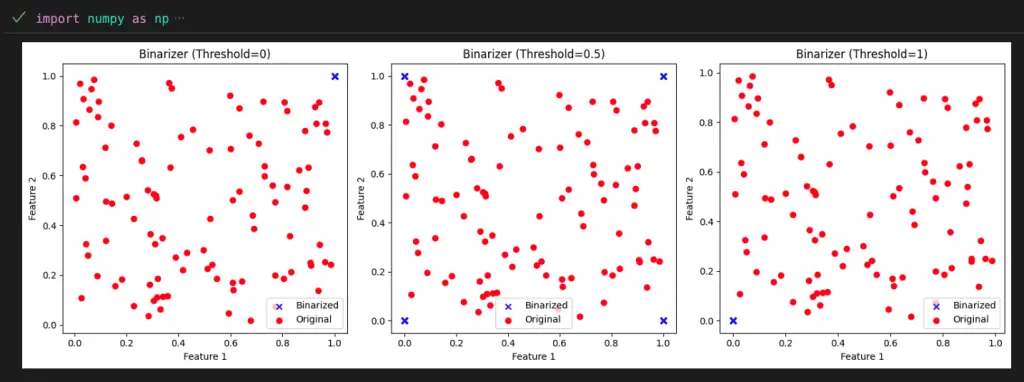

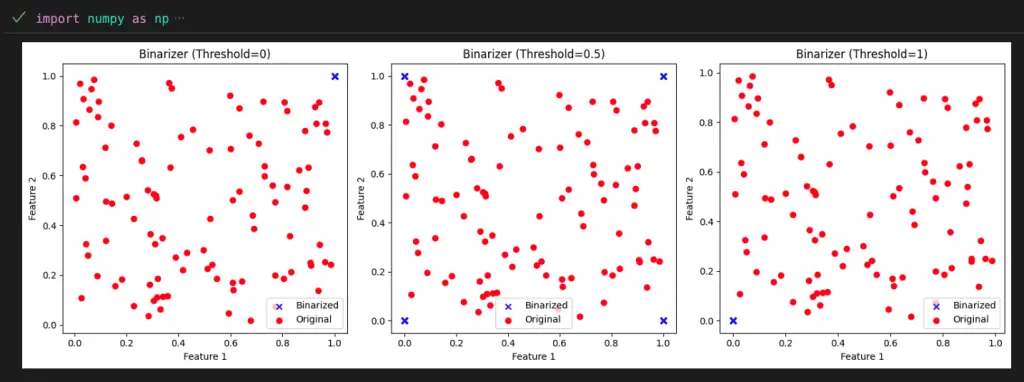
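To read the binary columns produced by the label binarizers, it helps to know which label each column stands for. The following sketch, using illustrative fruit labels similar to those above, inspects the `classes_` attribute and maps the encoded rows back with `inverse_transform`.

```python
from sklearn.preprocessing import LabelBinarizer, MultiLabelBinarizer

# One label per sample: each row of the output has a single 1.
lb = LabelBinarizer()
onehot = lb.fit_transform(['apple', 'banana', 'orange', 'banana'])
print(lb.classes_)                   # column order: sorted unique labels
print(lb.inverse_transform(onehot))  # recovers the original labels

# Several labels per sample: each row can have more than one 1.
baskets = [('apple', 'banana'), ('banana', 'orange', 'grape'), ('apple', 'orange')]
mlb = MultiLabelBinarizer()
indicator = mlb.fit_transform(baskets)
print(mlb.classes_)
print(mlb.inverse_transform(indicator))  # tuples, in class order
```

The recovered tuples follow the sorted class order, so the labels inside a tuple may appear in a different order than in the input.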

Binarizer Thresholds

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.preprocessing import Binarizer

# Generate random data
np.random.seed(42)
data = np.random.rand(100, 2)

# Apply different binarizers
binarizers = {
    'Binarizer (Threshold=0)': Binarizer(threshold=0),
    'Binarizer (Threshold=0.5)': Binarizer(threshold=0.5),
    'Binarizer (Threshold=1)': Binarizer(threshold=1)
}
binarized_data = {binarizer_name: binarizer.transform(data) for binarizer_name, binarizer in binarizers.items()}

# Create subplots to compare the effects of different binarizers
fig, axes = plt.subplots(1, len(binarizers), figsize=(15, 5))
for ax, (binarizer_name, binarized_features) in zip(axes, binarized_data.items()):
    ax.scatter(binarized_features[:, 0], binarized_features[:, 1], c='blue', marker='x', label='Binarized')
    ax.scatter(data[:, 0], data[:, 1], c='red', marker='o', label='Original')
    ax.set_title(binarizer_name)
    ax.set_xlabel("Feature 1")
    ax.set_ylabel("Feature 2")
    ax.legend()

plt.tight_layout()
plt.show()
```
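The effect of each threshold can also be summarized numerically. As a rough sketch, the snippet below regenerates the same kind of uniform random data and reports the share of values mapped to 1 for each threshold; the `fractions` dictionary is a name introduced here for illustration.

```python
import numpy as np
from sklearn.preprocessing import Binarizer

# Same kind of data as in the plot: uniform values in [0, 1).
np.random.seed(42)
data = np.random.rand(100, 2)

fractions = {}
for threshold in (0.0, 0.5, 1.0):
    binarized = Binarizer(threshold=threshold).fit_transform(data)
    # The mean of a 0/1 array is the fraction of entries equal to 1.
    fractions[threshold] = binarized.mean()
    print(f"threshold={threshold}: {fractions[threshold]:.2f} of values map to 1")
```

Because the data lies in [0, 1), a threshold of 0 turns essentially every value into 1 and a threshold of 1 turns every value into 0. Only the middle threshold splits the data.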

Benefits of Binarization

Binarization offers the following advantages:

- Simplifies data, reducing complexity.

- Can be helpful for algorithms that rely on binary input.

- Highlights specific features or relationships.
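As one example of an algorithm that relies on binary input, Bernoulli Naive Bayes can either receive features that were binarized beforehand or binarize them itself through its `binarize` parameter. The sketch below compares both routes on synthetic data; the data, the target rule, and the 0.5 threshold are assumptions made purely for illustration.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import Binarizer

# Synthetic data: the target depends only on whether feature 0 exceeds 0.5.
rng = np.random.default_rng(0)
X = rng.random((200, 5))
y = (X[:, 0] > 0.5).astype(int)

# Route 1: binarize explicitly, then tell BernoulliNB the input is already binary.
explicit = make_pipeline(Binarizer(threshold=0.5), BernoulliNB(binarize=None))
explicit.fit(X, y)

# Route 2: let BernoulliNB apply the same threshold internally.
builtin = BernoulliNB(binarize=0.5).fit(X, y)

same = np.array_equal(explicit.predict(X), builtin.predict(X))
print("Identical predictions:", same)
print("Training accuracy:", explicit.score(X, y))
```

The explicit pipeline is the more general pattern, since the same Binarizer step can be placed in front of any estimator.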

Considerations and Impact on Algorithms

Using binarization can have implications for machine learning algorithms:

- May lead to information loss due to simplification.

- Some algorithms might perform better with binary inputs.

- Choose the threshold carefully to retain relevant information.
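One possible way to choose the threshold is to treat it as a hyperparameter and compare candidates with cross-validation. The following is a sketch under stated assumptions: the data is synthetic with a known cut-off of 0.7, LogisticRegression is an arbitrary choice of classifier, and the candidate list is hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import Binarizer

# Synthetic data: the target flips when feature 0 exceeds 0.7.
rng = np.random.default_rng(0)
X = rng.random((300, 3))
y = (X[:, 0] > 0.7).astype(int)

pipeline = Pipeline([
    ("binarizer", Binarizer()),
    ("clf", LogisticRegression()),
])

# Hypothetical candidate thresholds to compare.
candidate_thresholds = [0.1, 0.3, 0.5, 0.7, 0.9]
search = GridSearchCV(pipeline, {"binarizer__threshold": candidate_thresholds}, cv=5)
search.fit(X, y)

print("Best threshold:", search.best_params_["binarizer__threshold"])
print("Cross-validated accuracy:", search.best_score_)
```

On real data there is rarely a single true cut-off, so the selected threshold is best read as a starting point to check against domain knowledge.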

Important Concepts in Scikit-Learn Binarizers

- Binarization of Data

- Thresholding

- Feature Transformation

- Information Loss

- Categorical Features

- Numerical Binarization

- Domain Knowledge

- Feature Interpretation

- Impact on Algorithms

What to Know Before You Learn Scikit-Learn Binarizers

- Understanding of Data Transformation

- Basic Python Programming Skills

- Familiarity with Scikit-Learn Library

- Knowledge of Data Preprocessing

- Concept of Feature Engineering

- Awareness of Feature Interpretation

- Importance of Feature Binarization

- Familiarity with Data Types

- Awareness of Data Patterns

- Experience with Categorical Data

What’s Next?

After learning about Scikit-Learn binarizers, these topics are natural next steps for deepening your understanding of data preprocessing and machine learning:

- Feature Engineering Techniques

- Handling Missing Data

- Feature Selection and Dimensionality Reduction

- Model Evaluation and Selection

- Ensemble Learning Methods

- Advanced Machine Learning Algorithms

- Time Series Analysis and Forecasting

- Natural Language Processing (NLP)

- Deep Learning and Neural Networks

- Machine Learning in Real-world Applications

Exploring these topics will help you broaden your knowledge in machine learning and equip you to tackle a wider range of challenges and projects.

Relevant Entities

| Entity | Properties |
|---|---|
| Binarizer | Transforms numerical features into binary values based on a threshold. Useful for creating binary features. Helps focus on specific aspects of a feature. |
| Threshold | Value used to determine the binary conversion. Values strictly above the threshold become 1; values at or below it become 0. Can be set based on domain knowledge or experimentation. |
| fit | For Binarizer, validates parameters only; the transformer is stateless, so nothing is learned from the data. For LabelBinarizer and MultiLabelBinarizer, learns the set of classes from the training labels. |
| transform | Method to apply binarization to data. For Binarizer, applies the fixed threshold. For LabelBinarizer and MultiLabelBinarizer, encodes labels using the classes learned during fit. |
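One nuance about fit and transform is worth checking in code: Binarizer is stateless, so its transform works without a prior fit, whereas LabelBinarizer has to learn its classes first. The sketch below uses made-up numbers and animal labels to show the difference; the `needs_fit` flag is a name introduced here for illustration.

```python
import numpy as np
from sklearn.exceptions import NotFittedError
from sklearn.preprocessing import Binarizer, LabelBinarizer

X = np.array([[0.1, 0.9],
              [0.6, 0.4]])

# Binarizer only needs its threshold, so transform works without fit.
X_bin = Binarizer(threshold=0.5).transform(X)
print(X_bin)

# LabelBinarizer must see the labels first; transform before fit fails.
lb = LabelBinarizer()
try:
    lb.transform(['cat', 'dog'])
    needs_fit = False
except NotFittedError:
    needs_fit = True
print("LabelBinarizer needs fit:", needs_fit)

# After fit, the learned classes define the output columns.
lb.fit(['cat', 'dog', 'bird'])
encoded = lb.transform(['dog', 'bird'])
print(lb.classes_)
print(encoded)
```

Calling `fit_transform` on Binarizer is still a reasonable habit inside pipelines, since parameter validation happens in fit.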

Conclusion

Scikit-Learn preprocessing binarizers provide a straightforward way to transform numerical features and labels into binary values. Understanding where binarization helps, what information it discards, and how the threshold affects the result lets you decide when it belongs in a preprocessing pipeline.