Scikit-Learn’s KBinsDiscretizer is a preprocessing transformer that discretizes continuous data into intervals. This article covers what it does, how it works, when to use it, its main parameters, and a few Python examples.

What is KBinsDiscretizer?

KBinsDiscretizer is a preprocessing technique that discretizes continuous features into discrete bins or intervals. It divides the range of each feature into a specified number of bins, allowing you to convert numerical data into categorical-like values.

How Does KBinsDiscretizer Work?

It works by learning bin edges for each continuous feature when you call fit, then mapping every value to the bin it falls into when you call transform. You can set the number of bins, the strategy used to place the bin edges, and how the resulting bin indices are encoded (as ordinal integers or as one-hot vectors). This is particularly useful when working with algorithms that perform better with discrete features.
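As a minimal sketch of the fit-then-transform behaviour described above, the snippet below uses a small made-up array (the values are illustrative, not from the article) and the ‘uniform’ strategy, then reads the learned edges from the fitted `bin_edges_` attribute.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Toy single-feature data spanning 0 to 10 (illustrative values).
X = np.array([[0.0], [1.0], [4.0], [6.0], [9.0], [10.0]])

# 'uniform' splits the observed range [0, 10] into equal-width bins.
disc = KBinsDiscretizer(n_bins=2, encode="ordinal", strategy="uniform")
disc.fit(X)

edges = disc.bin_edges_[0]          # learned edges for feature 0
codes = disc.transform(X).ravel()   # bin index assigned to each row

print("edges:", edges)
print("codes:", codes)
```

With two equal-width bins over this range, the single interior edge sits at 5, so values below 5 get index 0 and the rest get index 1.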

When to Use KBinsDiscretizer?

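KBinsDiscretizer is particularly useful when you have continuous features and want to transform them into a format that’s better suited for certain machine learning algorithms. For example, linear models often benefit from binned, one-hot encoded features because each bin gets its own coefficient, which lets the model fit non-linear patterns. Tree-based models such as decision trees and random forests already split continuous features on thresholds, so they usually gain little from binning.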

How to Use KBinsDiscretizer?

Using KBinsDiscretizer is quite straightforward. You create an instance of the class, set the desired parameters such as the number of bins and the binning strategy, and then fit and transform your data. The transformed data will have the continuous features discretized into bins.
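The workflow above can be sketched as follows. The data is randomly generated for illustration, and the split into `X_train` and `X_new` is an assumption added here to show that edges learned in `fit` are reused on unseen rows. The sketch also calls `inverse_transform`, which maps bin indices back to bin centres.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

rng = np.random.default_rng(0)                 # fixed seed, illustrative only
X_train = rng.uniform(0, 100, size=(200, 2))   # two continuous features
X_new = rng.uniform(0, 100, size=(5, 2))       # rows not seen during fit

disc = KBinsDiscretizer(n_bins=4, encode="ordinal", strategy="uniform")
disc.fit(X_train)                       # learn bin edges per feature
X_new_binned = disc.transform(X_new)    # reuse those edges on new rows

# inverse_transform returns the centre of each bin, so the round trip
# is lossy: the original values are not recovered exactly.
X_new_approx = disc.inverse_transform(X_new_binned)

print(X_new_binned)
print(X_new_approx)
```

Fitting on training data only and then transforming new data keeps the bin edges fixed, which is the usual pattern for scikit-learn transformers.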

What Are the Parameters?

The key parameters of KBinsDiscretizer include:

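- n_bins: The number of bins to create (default 5). It can be a single integer or one value per feature.

- encode: How the bin indices are encoded: ‘onehot’ (the default, returns a sparse matrix), ‘onehot-dense’ (returns a dense array), or ‘ordinal’ (returns the bin index itself).

- strategy: How the bin edges are chosen: ‘uniform’ (equal-width bins), ‘quantile’ (the default, bins holding roughly equal numbers of samples), or ‘kmeans’ (edges based on 1D k-means clustering of each feature).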
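To make the effect of the strategy parameter concrete, here is a small sketch that bins the same right-skewed sample with each strategy and counts how many values land in each bin. The exponential data and the `counts` dictionary are assumptions made for illustration.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

rng = np.random.default_rng(42)
X = rng.exponential(scale=1.0, size=(1000, 1))  # right-skewed feature

counts = {}
for strategy in ("uniform", "quantile", "kmeans"):
    disc = KBinsDiscretizer(n_bins=4, encode="ordinal", strategy=strategy)
    codes = disc.fit_transform(X).astype(int).ravel()
    # Number of samples that fall into each of the four bins.
    counts[strategy] = np.bincount(codes, minlength=4)
    print(f"{strategy:>8}: {counts[strategy]}")
```

On skewed data like this, ‘uniform’ tends to put most samples in the first bin, while ‘quantile’ spreads them about evenly. Depending on the installed scikit-learn version, the ‘quantile’ strategy may emit a FutureWarning about changing defaults.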

Why Use KBinsDiscretizer?

Using KBinsDiscretizer can improve the performance of some machine learning algorithms, especially models that are linear in their inputs or that expect discrete features. Binning lets such models capture non-linear relationships between features and the target variable, at the cost of discarding the variation within each bin.
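The point about non-linear relationships can be illustrated with a rough sketch: a linear regression fitted to a noisy sine curve, with and without binning. The synthetic data, the choice of 10 bins, and the variable names are assumptions for this example, and the scores are computed on the training data only.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import KBinsDiscretizer

rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=300)  # non-linear target

# Baseline: a straight line through the raw feature.
plain = LinearRegression().fit(X, y)

# Binned: one coefficient per bin gives a piecewise-constant fit.
binned = make_pipeline(
    KBinsDiscretizer(n_bins=10, encode="onehot-dense", strategy="uniform"),
    LinearRegression(),
).fit(X, y)

r2_plain = plain.score(X, y)    # R^2 on the training data
r2_binned = binned.score(X, y)
print(f"plain R^2:  {r2_plain:.3f}")
print(f"binned R^2: {r2_binned:.3f}")
```

The binned model should score noticeably higher here because the step function can follow the curve. A held-out test set would be needed to judge generalization.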

Python Code Examples

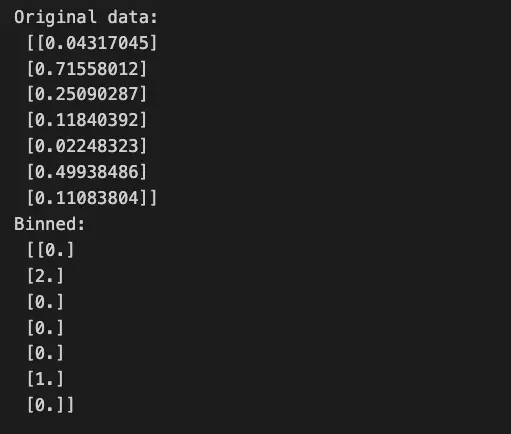

Example 1: Using KBinsDiscretizer to Bin Continuous Features

from sklearn.preprocessing import KBinsDiscretizer

import numpy as np

#Generate random data

data = np.random.rand(100, 1)

#Initialize KBinsDiscretizer

n_bins = 3

encoder = KBinsDiscretizer(n_bins=n_bins, encode='ordinal', strategy='uniform')

#Transform data

binned_data = encoder.fit_transform(data)

print('Original data:\n',data[:7])

print('Binned:\n',binned_data[:7])

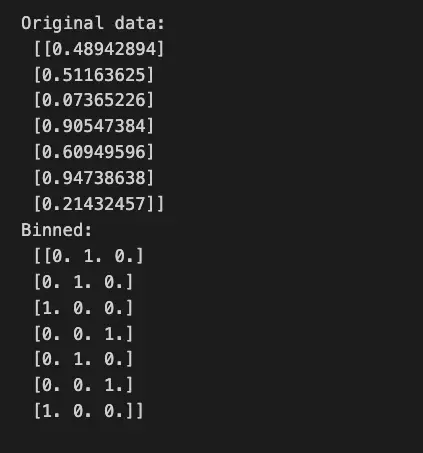

Example 2: Binning and One-Hot Encoding

from sklearn.preprocessing import KBinsDiscretizer

import numpy as np

#Generate random data

data = np.random.rand(100, 1)

#Initialize KBinsDiscretizer

n_bins = 3

encoder = KBinsDiscretizer(n_bins=n_bins, encode='onehot', strategy='uniform')

#Transform data

binned_data = encoder.fit_transform(data)

print('Original data:\n',data[:7])

print('Binned:\n',binned_data.toarray()[:7])
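As a variation on Example 2, the sketch below uses encode='onehot-dense', which returns a regular NumPy array so no call to `.toarray()` is needed. The seeded generator and the comparison against the ordinal encoding are additions made for illustration.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

rng = np.random.default_rng(0)
data = rng.random((100, 1))

dense_encoder = KBinsDiscretizer(n_bins=3, encode="onehot-dense", strategy="uniform")
onehot = dense_encoder.fit_transform(data)   # dense ndarray, shape (100, 3)

ordinal_encoder = KBinsDiscretizer(n_bins=3, encode="ordinal", strategy="uniform")
ordinal = ordinal_encoder.fit_transform(data).ravel()

# Each one-hot row contains a single 1; its column is the ordinal bin index.
ordinal_from_onehot = onehot.argmax(axis=1)

print(onehot[:5])
print(ordinal[:5], ordinal_from_onehot[:5])
```

The two encodings carry the same information. One-hot is usually the better fit for linear models, while ordinal keeps the output to one column per feature.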

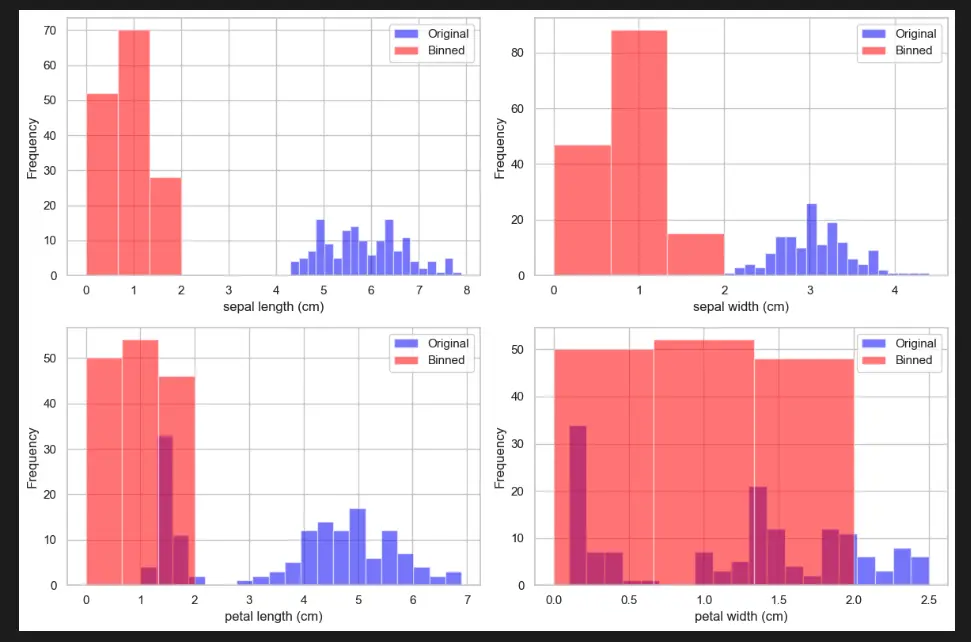

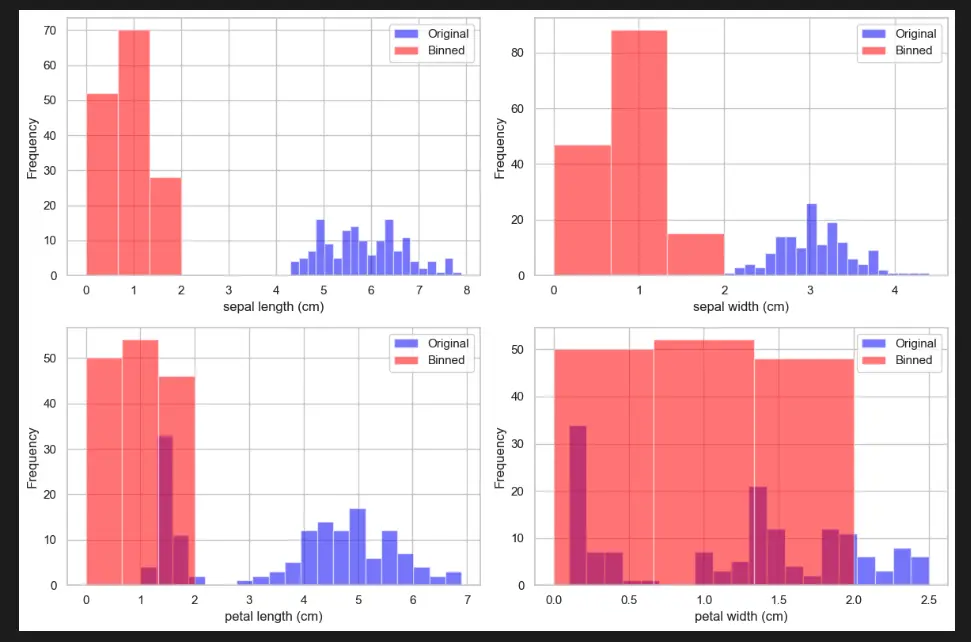

Visualize KBinsDiscretizer with Python

import matplotlib.pyplot as plt

import numpy as np

from sklearn.datasets import load_iris

from sklearn.preprocessing import KBinsDiscretizer

# Load the Iris dataset

iris = load_iris()

X = iris.data

feature_names = iris.feature_names

# Create a KBinsDiscretizer instance

n_bins = 3

enc = KBinsDiscretizer(n_bins=n_bins, encode='ordinal', strategy='uniform')

X_binned = enc.fit_transform(X)

# Plot original and discretized data

plt.figure(figsize=(12, 8))

for feature_idx, feature_name in enumerate(feature_names):

    # One subplot per feature, overlaying raw values and bin indices

    plt.subplot(2, 2, feature_idx + 1)

    plt.hist(X[:, feature_idx], bins=20, color='blue', alpha=0.5, label='Original')

    plt.hist(X_binned[:, feature_idx], bins=n_bins, color='red', alpha=0.5, label='Binned')

    plt.xlabel(feature_name)

    plt.ylabel('Frequency')

    plt.legend()

plt.tight_layout()

plt.show()
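To complement the plot, the bin edges and per-bin counts can also be inspected numerically. This is a small sketch using the same Iris setup; the `summary` dictionary is a hypothetical helper structure introduced here.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.preprocessing import KBinsDiscretizer

iris = load_iris()
X = iris.data

enc = KBinsDiscretizer(n_bins=3, encode="ordinal", strategy="uniform")
X_binned = enc.fit_transform(X).astype(int)

summary = {}
for idx, name in enumerate(iris.feature_names):
    edges = np.round(enc.bin_edges_[idx], 2)                 # 4 edges -> 3 bins
    counts = np.bincount(X_binned[:, idx], minlength=3)      # samples per bin
    summary[name] = (edges, counts)
    print(f"{name}: edges={edges}, counts={counts}")
```

The edges are in the original units of each feature, whereas the binned values are bin indices 0, 1, and 2, which is why the two histograms in the plot sit on different parts of the x-axis.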

Important Concepts in Scikit-Learn Preprocessing KBinsDiscretizer

- Data Preprocessing

- Feature Binning

- Bin Width and Number of Bins

- Discretization Strategies

- Encode and Transformation Options

What to Know Before You Learn Scikit-Learn Preprocessing KBinsDiscretizer

- Basic understanding of data preprocessing techniques

- Familiarity with feature engineering concepts

- Knowledge of continuous and discrete data

- Understanding of binning and discretization methods

- Awareness of feature transformation and scaling

- Familiarity with Scikit-Learn library and its usage

What’s Next?

- Understanding more advanced feature engineering techniques

- Exploring other preprocessing methods for different types of data

- Learning about feature scaling and normalization

- Studying dimensionality reduction techniques

- Exploring different algorithms and models in machine learning

- Practicing with real-world datasets to apply preprocessing techniques

Relevant Entities

| Entity | Properties |
|---|---|
| KBinsDiscretizer | Discretizes continuous features. Divides each feature into a specified number of bins. Can encode bins as ordinal integers or one-hot vectors. Supports different binning strategies. |
| Continuous Features | Numerical features with a range of values. Used as input for KBinsDiscretizer. |
| Bins | Intervals that continuous features are divided into. Number of bins can be specified. Defines the granularity of discretization. |
| Encoding | Process of representing bins as numerical values. Ordinal or one-hot encoding can be applied. Useful for algorithms that require discrete features. |
| Binning Strategies | Different approaches to dividing features into bins. Uniform, quantile, and k-means strategies. Strategy choice affects discretization result. |
| Machine Learning Algorithms | Models used for various tasks in machine learning. Some, such as linear models, may perform better with discrete features. KBinsDiscretizer converts continuous inputs into that form. |

Conclusion

KBinsDiscretizer offers a simple yet effective way to transform continuous numerical features into categorical-like values, making them suitable for various machine learning algorithms. By understanding its functionality and parameters, you can make informed decisions on when and how to apply this preprocessing technique to enhance your machine learning workflows.