Feature Encoding helps to convert categorical data to numerical values in a way that can be used in machine learning.

Most machine learning algorithms require numerical input data.

However, real-world datasets often include categorical features, such as gender or product type, that cannot be directly used by machine learning algorithms.

Feature encoding is the feature transformation process that converts categorical data into numerical values.

In this article, we will explore the concept of feature encoding, its importance in machine learning, and some popular encoding techniques.

What is Feature Encoding?

Feature encoding is the process of converting categorical data into numerical values that machine learning algorithms can understand. In machine learning, a feature refers to any input variable used to train a model.

Categorical features are those that take on a finite number of distinct values. For example, gender can take on only two possible values: male or female.

Product type could be a categorical feature in a dataset with various types of products, such as clothing, electronics, or books.

Why is Feature Encoding Important?

Most machine learning algorithms use mathematical equations to make predictions. Categorical data cannot be directly used by these equations.

To use categorical data in machine learning algorithms, it needs to be transformed into numerical values.

Additionally, the choice of encoding determines the dimensionality of the resulting data: one-hot encoding adds one column per category, while label, ordinal, count, and target encoding keep a single column per feature. This trade-off can affect model performance.

Popular Feature Encoding Techniques

Popular feature encoding techniques that can be used in machine learning projects are:

- One-Hot Encoding

- Label Encoding

- Ordinal Encoding

- Count Encoding

- Target Encoding

One-Hot Encoding

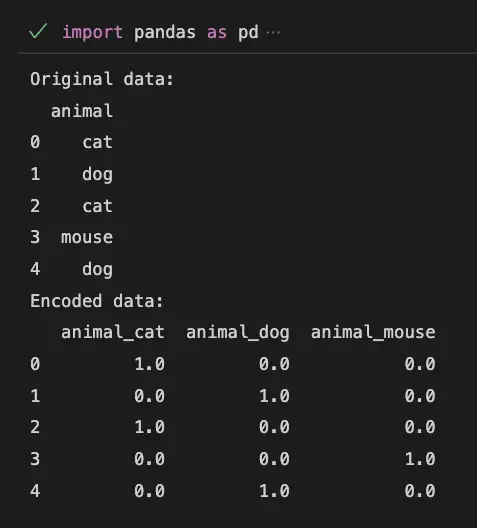

One-hot encoding is one of the most popular feature encoding techniques, and scikit-learn implements it as OneHotEncoder in sklearn.preprocessing. It converts each categorical value into a binary vector of zeros and ones. Each category becomes a new feature that is assigned a value of one if the row belongs to that category and zero otherwise.

One-Hot Encoding Python Example

Here is an example of what OneHotEncoder can do to your data.

import pandas as pd

from sklearn.preprocessing import OneHotEncoder

# create a toy dataset

data = {'animal': ['cat', 'dog', 'cat', 'mouse', 'dog']}

df = pd.DataFrame(data)

# initialize a OneHotEncoder

encoder = OneHotEncoder()

# fit and transform the data

encoded_data = encoder.fit_transform(df)

# convert the encoded data to a pandas DataFrame

encoded_df = pd.DataFrame(encoded_data.toarray(), columns=encoder.get_feature_names_out(['animal']))

# print the original data

print('Original data:')

print(df)

# print the encoded data

print('Encoded data:')

print(encoded_df)

The result is an encoded pandas DataFrame that can be used for training.

Label Encoding

Label encoding is a simple technique that converts categorical data into integer values. Each category is assigned a unique integer value, and these integer values are used as the new feature.

Label Encoding Python Example

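In this example, we will show how to use sklearn.preprocessing.LabelEncoder to perform label encoding in Scikit-learn. Note that scikit-learn documents LabelEncoder as a tool for encoding target labels (y); for input features, OrdinalEncoder produces the same kind of integer codes.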

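from sklearn.preprocessing import LabelEncoder

# toy list of category labels

data = ['cat', 'dog', 'cat', 'mouse', 'dog']

encoder = LabelEncoder()

# categories are sorted, then numbered from 0: cat=0, dog=1, mouse=2

encoded_data = encoder.fit_transform(data)

Ordinal Encoding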

Ordinal encoding is similar to label encoding, but it is used when the categorical data has an inherent order. For example, a dataset with ratings ranging from one to five can be converted to integer values ranging from one to five using ordinal encoding.
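As a minimal sketch of ordinal encoding, the example below uses scikit-learn's OrdinalEncoder with an explicitly supplied category order. The `size` column and its values are hypothetical, chosen only to illustrate an ordered feature.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

# hypothetical ordered feature (illustrative data only)
df = pd.DataFrame({'size': ['small', 'large', 'medium', 'small']})

# pass the order explicitly; otherwise categories are sorted alphabetically
encoder = OrdinalEncoder(categories=[['small', 'medium', 'large']])

# fit_transform expects a 2D input and returns a 2D array of floats
df['size_encoded'] = encoder.fit_transform(df[['size']]).ravel()

print(df)
```

Supplying the order matters here: without it, the alphabetical default would place 'large' before 'medium' and 'small', which does not match the intended ranking.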

Count Encoding

Count encoding is a technique that replaces each category with the count of its occurrences in the dataset.
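Count encoding can be sketched with plain pandas, as shown below. The toy `animal` column is an assumption made for illustration; a dedicated implementation such as CountEncoder in the category-encoders package may behave differently in edge cases.

```python
import pandas as pd

# toy dataset (illustrative data only)
df = pd.DataFrame({'animal': ['cat', 'dog', 'cat', 'mouse', 'dog', 'cat']})

# count how often each category occurs
counts = df['animal'].value_counts()

# replace each category with its count
df['animal_count'] = df['animal'].map(counts)

print(df)
```

Categories that happen to share the same count become indistinguishable after this encoding, which is worth checking before relying on it.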

Target Encoding

Target encoding is a technique that replaces each category with the mean of the target variable for that category. It is often used in classification problems.
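The idea can be sketched with a pandas group-by, as below. The `city` and `target` columns are hypothetical. This is a bare illustration of the per-category mean, not a production recipe: in practice the means are usually computed on training data only, often with smoothing or cross-fitting, to limit target leakage.

```python
import pandas as pd

# toy dataset with a binary target (illustrative data only)
df = pd.DataFrame({
    'city': ['A', 'A', 'B', 'B', 'B', 'C'],
    'target': [1, 0, 1, 1, 0, 1],
})

# mean of the target for each category
means = df.groupby('city')['target'].mean()

# replace each category with the mean target of that category
df['city_encoded'] = df['city'].map(means)

print(df)
```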

Useful Python Libraries for Feature encoding

- scikit-learn: OneHotEncoder, LabelEncoder, OrdinalEncoder, TargetEncoder (added in version 1.3)

- category-encoders: BinaryEncoder, CountEncoder, TargetEncoder, OrdinalEncoder, HashingEncoder

- pandas: get_dummies, factorize, replace
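For quick experiments, the pandas functions listed above can be used without scikit-learn. The following sketch applies get_dummies and factorize to a toy `animal` column, which is assumed here purely for illustration.

```python
import pandas as pd

# toy dataset (illustrative data only)
df = pd.DataFrame({'animal': ['cat', 'dog', 'cat', 'mouse', 'dog']})

# one-hot encoding: one indicator column per category
dummies = pd.get_dummies(df['animal'], prefix='animal')

# integer codes in order of first appearance, plus the unique categories
codes, uniques = pd.factorize(df['animal'])

print(dummies)
print(codes)
print(uniques)
```

Unlike a fitted scikit-learn encoder, get_dummies does not remember the categories it saw, so applying it separately to training and test data can produce mismatched columns.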

Datasets useful for Feature encoding

Titanic

from sklearn.datasets import fetch_openml

titanic = fetch_openml(name='titanic', version=1)

data = titanic.data

target = titanic.target

Adult Census Income

import pandas as pd

url = "https://archive.ics.uci.edu/ml/machine-learning-databases/adult/adult.data"

names = ['age', 'workclass', 'fnlwgt', 'education', 'education-num', 'marital-status',

'occupation', 'relationship', 'race', 'sex', 'capital-gain', 'capital-loss',

'hours-per-week', 'native-country', 'income']

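# fields in this file are separated by a comma and a space, so strip the leading spaces

df = pd.read_csv(url, header=None, names=names, skipinitialspace=True)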

data = df.drop('income', axis=1)

target = df.income
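Datasets like these mix numerical and categorical columns, so only some columns need encoding. The sketch below shows one possible way to handle that with scikit-learn's ColumnTransformer. To stay self-contained it uses a small stand-in frame with Adult-like column names and made-up rows rather than downloading the real data, and it assumes that every non-numeric column is categorical.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder

# small stand-in frame with Adult-like columns (illustrative values only)
data = pd.DataFrame({
    'age': [39, 50, 38],
    'workclass': ['State-gov', 'Private', 'Private'],
    'sex': ['Male', 'Male', 'Female'],
})

# assumption: every non-numeric column is a categorical feature
categorical_cols = data.select_dtypes(exclude='number').columns.tolist()

# one-hot encode the categorical columns and pass the numeric ones through
transformer = ColumnTransformer(
    [('onehot', OneHotEncoder(handle_unknown='ignore'), categorical_cols)],
    remainder='passthrough',
)
encoded = transformer.fit_transform(data)

print(categorical_cols)
print(encoded.shape)
```

Here the two categorical columns expand into four indicator columns, and the numeric `age` column is appended unchanged, giving five columns in total.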

Important Concepts in Feature encoding

- Categorical data

- Ordinal data

- One-hot encoding

- Label encoding

- Target encoding

- Count encoding

Before Learning Feature encoding

- Python programming language

- Basic machine learning concepts

- Statistical concepts

- Data preprocessing techniques

- Familiarity with different data types

What’s Next?

- Feature scaling

- Feature selection

- Dimensionality reduction

- Regularization techniques

- Model selection and hyperparameter tuning

Relevant entities

| Entity | Properties |
|---|---|
| Categorical feature | Takes on a finite number of distinct values |
| Numerical feature | Takes on continuous or discrete numerical values |
| One-hot encoding | Converts categorical data into a binary vector of zeros and ones |
| Label encoding | Converts categorical data into integer values |
| Ordinal encoding | Converts categorical data with inherent order into integer values |
| Count encoding | Replaces each category with the count of its occurrences in the dataset |
| Target encoding | Replaces each category with the mean of the target variable for that category |

Conclusion

Feature encoding is a crucial step in the machine learning pipeline, as it transforms categorical data into numerical values that can be used by machine learning models.

There are several popular feature encoding techniques, including one-hot encoding, label encoding, ordinal encoding, count encoding, and target encoding, each with its strengths and weaknesses.

Choosing the right encoding technique depends on the specific dataset and the machine learning task at hand.
