Machine learning relies heavily on data preprocessing to ensure accurate and reliable model performance. Scikit-Learn provides a set of preprocessing transformers that reshape your data before you feed it into machine learning algorithms. In this article, we’ll explore three of them: PowerTransformer, QuantileTransformer, and SplineTransformer.

PowerTransformer

What is PowerTransformer?

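PowerTransformer is a preprocessing transformer that applies a parametric, monotonic power transformation to each feature to make it more Gaussian-like. It supports the Yeo-Johnson method (the default, which accepts zero and negative values) and the Box-Cox method (which requires strictly positive data), and by default it also standardizes the output to zero mean and unit variance.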

Why Use PowerTransformer?

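- Reduces skew and stabilizes variance, which compresses long tails. Extreme outliers can still influence the fitted transformation.

- Can improve the performance of algorithms that assume roughly Gaussian inputs, such as linear discriminant analysis or Gaussian naive Bayes.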
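As a rough illustration of the skew correction described above, the sketch below applies PowerTransformer to a synthetic right-skewed feature and compares the skewness before and after. The log-normal sample and the variable names are assumptions made for this example, not part of the article's dataset.

```python
import numpy as np
from scipy.stats import skew
from sklearn.preprocessing import PowerTransformer

rng = np.random.default_rng(0)
# Synthetic right-skewed feature (log-normal), shaped (n_samples, 1)
X_skewed = rng.lognormal(mean=0.0, sigma=1.0, size=(1000, 1))

# Yeo-Johnson is the default method; Box-Cox needs strictly positive data
pt = PowerTransformer(method="yeo-johnson", standardize=True)
X_transformed = pt.fit_transform(X_skewed)

skew_before = skew(X_skewed[:, 0])
skew_after = skew(X_transformed[:, 0])
print(f"skewness before: {skew_before:.2f}")
print(f"skewness after:  {skew_after:.2f}")
print(f"fitted lambda:   {pt.lambdas_[0]:.2f}")
```

The fitted power parameter is stored per feature in `lambdas_`, so the same transformation can be applied to new data with `pt.transform`.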

QuantileTransformer

What is QuantileTransformer?

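QuantileTransformer is a preprocessing transformer that maps each feature to a uniform (the default) or Gaussian distribution using the feature's estimated quantiles. The mapping is non-parametric and rank-based, so it preserves the order of values within a feature but distorts the distances between them.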

Why Use QuantileTransformer?

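- Puts features with very different scales and shapes onto a common target distribution, which can improve model performance.

- Can be useful when dealing with strongly non-normal data, such as heavy-tailed or multimodal features.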
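The two target distributions can be compared with a small sketch like the one below. The exponential sample and the choice of `n_quantiles=100` are assumptions for illustration only; `n_quantiles` should not exceed the number of samples.

```python
import numpy as np
from sklearn.preprocessing import QuantileTransformer

rng = np.random.default_rng(0)
# Synthetic non-normal feature (exponential), shaped (n_samples, 1)
X_exp = rng.exponential(scale=2.0, size=(500, 1))

qt_uniform = QuantileTransformer(
    n_quantiles=100, output_distribution="uniform", random_state=0
)
qt_normal = QuantileTransformer(
    n_quantiles=100, output_distribution="normal", random_state=0
)

X_uniform = qt_uniform.fit_transform(X_exp)
X_normal = qt_normal.fit_transform(X_exp)

# Uniform output lies in [0, 1]; normal output is centered near 0
print("uniform range:", X_uniform.min(), X_uniform.max())
print("normal median:", np.median(X_normal))
```

Because the mapping is monotonic, sorting the samples by their original values also sorts them by their transformed values.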

SplineTransformer

What is SplineTransformer?

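SplineTransformer is a preprocessing transformer that expands each feature into a set of B-spline basis functions (piecewise polynomials, cubic by default). It does not interpolate the data itself. Instead, it returns several new columns per feature that a downstream linear model can combine into a smooth curve.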

Why Use SplineTransformer?

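- Can be effective when the relationship between a feature and the target is non-linear.

- Lets a linear model fit smooth, flexible curves. Each feature is expanded independently, so interactions between features are not captured by the transformer on its own.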

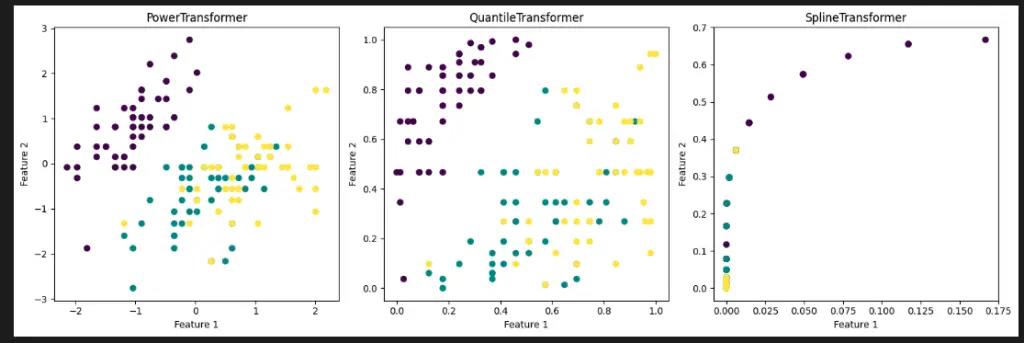

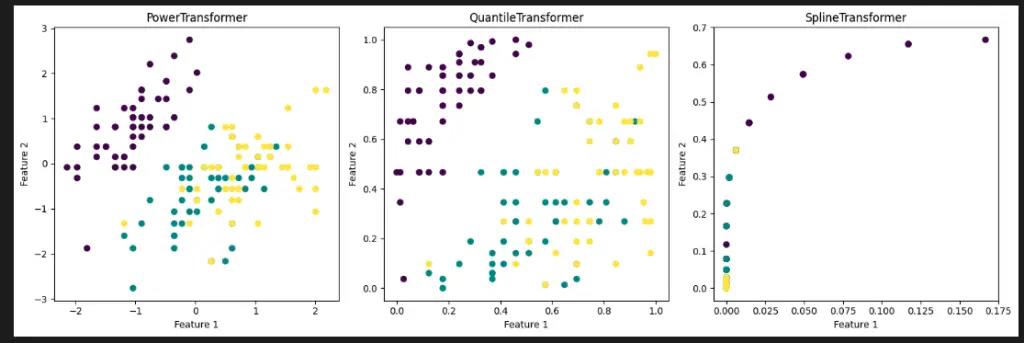

Comparison: PowerTransformer vs. QuantileTransformer vs. SplineTransformer

PowerTransformer vs. QuantileTransformer:

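- PowerTransformer fits a parametric power function to make data more Gaussian-like, while QuantileTransformer uses a non-parametric quantile mapping that can target either a uniform or a Gaussian distribution.

- PowerTransformer is sensitive to outliers because they affect the estimated power parameter and the standardization, whereas QuantileTransformer is less sensitive because its transformation is rank-based.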
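One way to probe the outlier claim above is to grow a single outlier and measure how far the other transformed points move. The sketch below does this on synthetic data; the helper names `with_outlier` and `inlier_shift` are hypothetical and exist only for this example, and the size of the effect will vary with the data.

```python
import numpy as np
from sklearn.preprocessing import PowerTransformer, QuantileTransformer

rng = np.random.default_rng(0)
base = rng.normal(size=(200, 1))

def with_outlier(value):
    """Return a copy of the base data whose last row is replaced by `value`."""
    X = base.copy()
    X[-1, 0] = value
    return X

X_mild, X_extreme = with_outlier(50.0), with_outlier(500.0)

def inlier_shift(make_transformer):
    """Largest change in the transformed inliers when only the outlier grows."""
    out_mild = make_transformer().fit_transform(X_mild)[:-1]
    out_extreme = make_transformer().fit_transform(X_extreme)[:-1]
    return float(np.max(np.abs(out_mild - out_extreme)))

shift_power = inlier_shift(PowerTransformer)
# n_quantiles equals the sample count, so the mapping depends on ranks only
shift_quantile = inlier_shift(lambda: QuantileTransformer(n_quantiles=200))

print(f"PowerTransformer inlier shift:    {shift_power:.4f}")
print(f"QuantileTransformer inlier shift: {shift_quantile:.4f}")
```

The ranks of the inliers do not change when the outlier grows, which is why the quantile-based output stays essentially fixed here.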

QuantileTransformer vs. SplineTransformer:

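- QuantileTransformer reshapes the distribution of each feature and returns one column per feature, while SplineTransformer leaves the distribution as it is and returns several basis columns per feature so that a model can capture non-linear relationships.

- SplineTransformer may be more appropriate when the target depends non-linearly on a feature and the downstream model is linear.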

Python Example with Sklearn Transformers

import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.preprocessing import PowerTransformer, QuantileTransformer, SplineTransformer

# Load a dataset (for example, the Iris dataset)
iris = load_iris()
X = iris.data

# Initialize instances of the transformers.
# n_quantiles must not exceed the number of samples (150 for Iris);
# the default of 1000 triggers a warning and is reduced automatically.
power_transformer = PowerTransformer()
quantile_transformer = QuantileTransformer(n_quantiles=X.shape[0], random_state=0)
spline_transformer = SplineTransformer()

# Fit and transform the data using each transformer
X_power = power_transformer.fit_transform(X)
X_quantile = quantile_transformer.fit_transform(X)
# SplineTransformer expands every feature into several basis columns
# (7 per feature with the defaults), so X_spline has 28 columns
X_spline = spline_transformer.fit_transform(X)

fig, axes = plt.subplots(1, 3, figsize=(15, 5))

# Plot using PowerTransformer
axes[0].scatter(X_power[:, 0], X_power[:, 1], c=iris.target)
axes[0].set_title("PowerTransformer")
axes[0].set_xlabel("Feature 1")
axes[0].set_ylabel("Feature 2")

# Plot using QuantileTransformer
axes[1].scatter(X_quantile[:, 0], X_quantile[:, 1], c=iris.target)
axes[1].set_title("QuantileTransformer")
axes[1].set_xlabel("Feature 1")
axes[1].set_ylabel("Feature 2")

# Plot using SplineTransformer: columns 0 and 1 are the first two
# spline basis functions of feature 1, not features 1 and 2
axes[2].scatter(X_spline[:, 0], X_spline[:, 1], c=iris.target)
axes[2].set_title("SplineTransformer")
axes[2].set_xlabel("Feature 1, spline basis 1")
axes[2].set_ylabel("Feature 1, spline basis 2")

plt.tight_layout()
plt.show()

Relevant entities

| Entity | Properties |
|---|---|
| PowerTransformer | Applies power transformations to make data more Gaussian-like. |
| QuantileTransformer | Transforms features to have a uniform or Gaussian distribution. |
| SplineTransformer | Generates B-spline basis functions (cubic by default) for each feature. |

Conclusion

Scikit-Learn preprocessing transformers provide essential tools to preprocess and transform data before using it for machine learning tasks. Whether you need to handle skewed data, normalize distributions, or capture non-linear relationships, Scikit-Learn has a variety of transformers to suit your needs. By applying the right preprocessing techniques, you can enhance the performance and reliability of your machine learning models.