Scikit-Learn's KernelCenterer, part of the sklearn.preprocessing module, centers an arbitrary kernel matrix. Let's explore what that means and why it matters for kernel-based machine learning.

What is KernelCenterer?

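KernelCenterer is a preprocessing transformer in Scikit-Learn that centers a kernel matrix K. Each entry K[i, j] of a kernel matrix is the inner product of φ(x_i) and φ(x_j), where φ is the feature map that the kernel implicitly applies to the samples. Centering does not mean subtracting a single global mean from every entry. Instead, KernelCenterer returns the kernel matrix you would get if you first subtracted the mean feature vector from every φ(x_i) and then recomputed the inner products. As a result, every row and every column of the centered training kernel has zero mean.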
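For a linear kernel the feature map φ is simply the identity, so the feature vectors can be centered directly and the result compared against KernelCenterer. The sketch below does that on small random data. The seed and the offset of 5.0 are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.preprocessing import KernelCenterer

# Small random dataset deliberately shifted away from the origin
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 3)) + 5.0

# Linear kernel: phi is the identity, so K = X X^T
K = X @ X.T

# Center the kernel with scikit-learn
K_centered = KernelCenterer().fit_transform(K)

# Center the features explicitly, then rebuild the linear kernel
X_centered = X - X.mean(axis=0)
K_from_centered_features = X_centered @ X_centered.T

print(np.allclose(K_centered, K_from_centered_features))  # True
```

Both routes give the same matrix. With a non-linear kernel such as RBF, only the first route is available, because φ is never computed.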

Why Center a Kernel Matrix?

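Many kernel methods are derived under the assumption that the data have zero mean. Kernel PCA is the clearest example. Its components are meant to be eigenvectors of the covariance of the feature vectors φ(x_i) that the kernel implicitly works with. The eigenvectors of an uncentered kernel matrix instead describe the data relative to the origin of feature space, so the leading component largely captures the data's offset from that origin rather than its spread. Because φ is rarely computed explicitly (for the RBF kernel the feature space is infinite-dimensional), the centering has to be applied to K itself. That is the job KernelCenterer does.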
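The offset effect can be sketched with a linear kernel, which is chosen here only because it keeps the feature space easy to reason about. When the data sit far from the origin, the leading eigenvector of the uncentered kernel points almost entirely in the constant direction, meaning it encodes the offset. The centered kernel's leading eigenvector is orthogonal to that direction. The seed and the offset of 10.0 are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.preprocessing import KernelCenterer

# 50 samples in 2-D, shifted far from the origin
rng = np.random.default_rng(42)
X = rng.normal(size=(50, 2)) + 10.0

# Linear kernel, uncentered and centered
K = X @ X.T
K_centered = KernelCenterer().fit_transform(K)

def leading_eigenvector(M):
    """Return the unit eigenvector of symmetric M with the largest eigenvalue."""
    eigenvalues, eigenvectors = np.linalg.eigh(M)  # eigenvalues in ascending order
    return eigenvectors[:, -1]

# Unit vector in the "all samples equal" (constant) direction
ones = np.ones(len(X)) / np.sqrt(len(X))

# Absolute cosine between each leading eigenvector and the constant direction
print(abs(leading_eigenvector(K) @ ones))           # close to 1: dominated by the offset
print(abs(leading_eigenvector(K_centered) @ ones))  # close to 0: offset removed
```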

How Does KernelCenterer Work?

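KernelCenterer follows the usual fit/transform pattern. fit(K) takes the n × n training kernel and stores two quantities: the mean of each column (the K_fit_rows_ attribute) and the mean of the whole matrix (K_fit_all_). transform(K) then subtracts the stored column means from each row, subtracts each row's own mean, and adds back the stored grand mean. Applied to the training kernel, this yields exactly the kernel of mean-centered feature vectors, so its rows and columns have zero mean. Applied to a kernel between new samples and the training samples, it reuses the same stored statistics. New data are therefore centered with the training mean, just as StandardScaler reuses the training mean on test data. The centered matrix can then be passed to any algorithm that accepts a precomputed kernel.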
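The same computation can be written out in NumPy and checked against KernelCenterer. In matrix form, the centered training kernel is K_c = K − 1_n K − K 1_n + 1_n K 1_n, where 1_n is the n × n matrix whose entries are all 1/n. A new (m × n) kernel between m test points and the n training points is centered with the training column means and grand mean plus its own row means. In this sketch, center_train and center_test are hypothetical helper names, and the random data are arbitrary. K_fit_rows_ is the attribute scikit-learn itself stores.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.preprocessing import KernelCenterer

rng = np.random.default_rng(0)
X_train = rng.normal(size=(8, 2))
X_test = rng.normal(size=(3, 2))

K_train = rbf_kernel(X_train)          # shape (8, 8)
K_test = rbf_kernel(X_test, X_train)   # shape (3, 8): test rows, training columns

def center_train(K):
    """Hypothetical helper: K - 1_n K - K 1_n + 1_n K 1_n for a square training kernel."""
    n = K.shape[0]
    one_n = np.full((n, n), 1.0 / n)
    return K - one_n @ K - K @ one_n + one_n @ K @ one_n

def center_test(K_new, K_fit):
    """Hypothetical helper: center an (m, n) kernel against the training kernel K_fit."""
    col_means = K_fit.mean(axis=0)                 # mean of each training column
    grand_mean = K_fit.mean()                      # mean of the whole training kernel
    row_means = K_new.mean(axis=1, keepdims=True)  # mean of each new row
    return K_new - col_means - row_means + grand_mean

centerer = KernelCenterer().fit(K_train)

print(np.allclose(centerer.transform(K_train), center_train(K_train)))        # True
print(np.allclose(centerer.transform(K_test), center_test(K_test, K_train)))  # True
print(np.allclose(centerer.K_fit_rows_, K_train.mean(axis=0)))               # True
```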

When to Use KernelCenterer?

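KernelCenterer is most useful when you compute a kernel matrix yourself and pass it to an algorithm that expects centered data but does not center it for you. The classic case is kernelized Principal Component Analysis (kernel PCA), whose derivation assumes centered feature vectors. Support Vector Machines (SVMs) are less sensitive. When the model has an intercept, shifting every feature vector by the same amount is absorbed by the bias term, so centering the kernel leaves the optimal decision function unchanged.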
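The sketch below shows a typical workflow when you compute kernels yourself. The centerer is fitted on the training kernel and then reused on the kernel between test and training points. The kernel PCA projection is written out by hand with NumPy purely for illustration. scikit-learn's own KernelPCA already centers its kernel internally, so in practice you would not put KernelCenterer in front of it. The dataset sizes and the 50/10 split are arbitrary choices.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.preprocessing import KernelCenterer

X, _ = make_blobs(n_samples=60, n_features=2, centers=2, random_state=42)
X_train, X_test = X[:50], X[50:]

# Fit the centerer on the training kernel only
K_train = rbf_kernel(X_train)
centerer = KernelCenterer()
K_train_c = centerer.fit_transform(K_train)

# Kernel PCA by hand: eigendecomposition of the centered training kernel
eigenvalues, eigenvectors = np.linalg.eigh(K_train_c)  # ascending order
top = np.argsort(eigenvalues)[::-1][:2]                 # indices of the two largest
lambdas, alphas = eigenvalues[top], eigenvectors[:, top]

# Coordinates of the training points on the two components
scores_train = alphas * np.sqrt(lambdas)

# Center the test-vs-train kernel with the training statistics, then project
K_test_c = centerer.transform(rbf_kernel(X_test, X_train))
scores_test = K_test_c @ (alphas / np.sqrt(lambdas))

print(scores_train.shape, scores_test.shape)  # (50, 2) (10, 2)
```

Because the training kernel was centered, the training scores have zero mean on each component. The test points are projected relative to that same training mean.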

Benefits of KernelCenterer

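- Gives kernel PCA and other methods that assume zero-mean data the centered feature vectors their derivations require.

- Removes the data's offset from the origin of feature space, which would otherwise dominate the leading eigenvector of the kernel matrix.

- Centers new data with the training statistics (fit on the training kernel, transform test kernels), so training and test samples are treated consistently.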

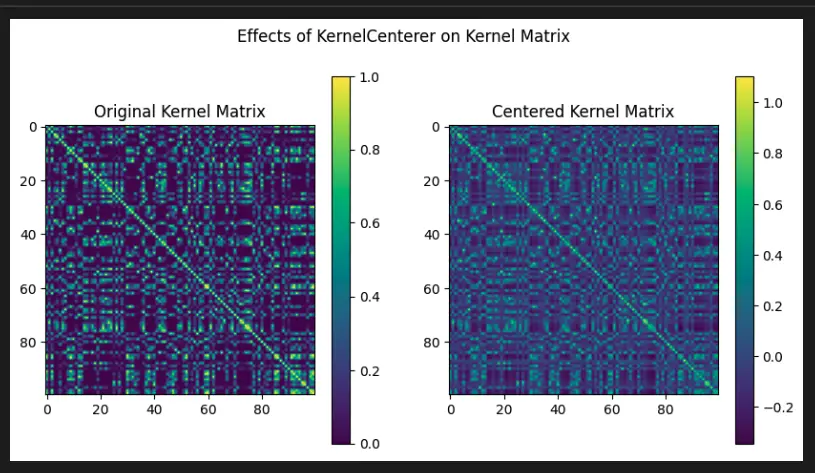

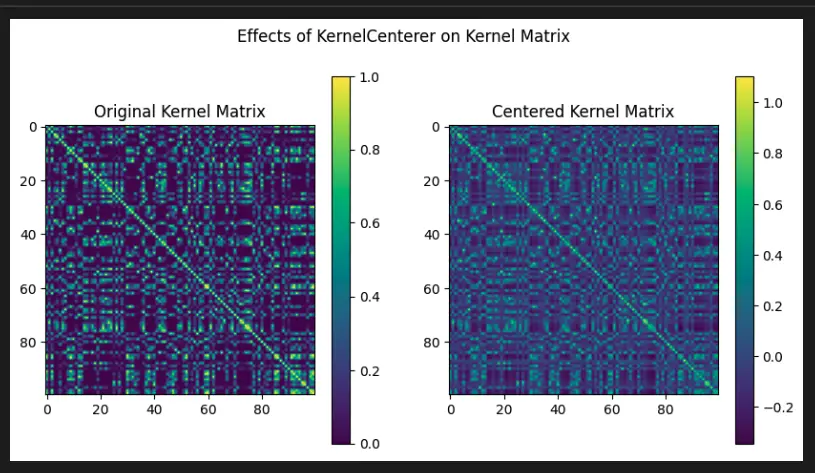

Visualize KernelCenterer with Python

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_blobs
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.preprocessing import KernelCenterer

# Generate a synthetic dataset with two clusters
X, _ = make_blobs(n_samples=100, n_features=2, centers=2, random_state=42)

# Compute the RBF kernel matrix (100 x 100)
kernel_matrix = rbf_kernel(X)

# Apply KernelCenterer to center the kernel matrix in feature space
centerer = KernelCenterer()
centered_kernel_matrix = centerer.fit_transform(kernel_matrix)

# Create side-by-side subplots for the original and centered kernel matrices
fig, axs = plt.subplots(1, 2, figsize=(10, 5))

# Plot the original kernel matrix
im1 = axs[0].imshow(kernel_matrix, cmap='viridis')
axs[0].set_title('Original Kernel Matrix')
fig.colorbar(im1, ax=axs[0])

# Plot the centered kernel matrix (it contains negative values)
im2 = axs[1].imshow(centered_kernel_matrix, cmap='viridis')
axs[1].set_title('Centered Kernel Matrix')
fig.colorbar(im2, ax=axs[1])

# Set an overall title and show the plots
fig.suptitle('Effects of KernelCenterer on Kernel Matrix')
plt.show()
```
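The visual difference between the two panels is mostly a change in the color scale. The short, self-contained sketch below checks it numerically with the same dataset settings as the plotting example. The original RBF kernel lies between 0 and 1, with ones on the diagonal. The centered kernel takes negative values and has rows that average to zero.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.preprocessing import KernelCenterer

X, _ = make_blobs(n_samples=100, n_features=2, centers=2, random_state=42)
kernel_matrix = rbf_kernel(X)
centered_kernel_matrix = KernelCenterer().fit_transform(kernel_matrix)

# Value ranges behind the two color bars
print("original:", kernel_matrix.min(), kernel_matrix.max())
print("centered:", centered_kernel_matrix.min(), centered_kernel_matrix.max())

# Largest absolute row mean before and after centering
print("max |row mean| original:", np.abs(kernel_matrix.mean(axis=1)).max())
print("max |row mean| centered:", np.abs(centered_kernel_matrix.mean(axis=1)).max())
```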

Important Concepts in Scikit-Learn Preprocessing KernelCenterer

- Kernel Methods in Machine Learning

- Feature Centering

- Kernel Functions

- Kernel Trick

- Support Vector Machines (SVM)

What to Know Before Learning Scikit-Learn Preprocessing KernelCenterer

- Understanding Linear Algebra

- Familiarity with Feature Scaling

- Basic Knowledge of Support Vector Machines (SVM)

- Understanding Kernel Methods and Kernel Functions

- Awareness of Preprocessing Techniques in Machine Learning

What’s Next?

After learning about Scikit-Learn Preprocessing KernelCenterer, you can delve into more advanced topics related to preprocessing and feature engineering in machine learning. Here are some topics that are commonly taught next:

- Principal Component Analysis (PCA) for Dimensionality Reduction

- Feature Selection Techniques

- Advanced Kernel Methods and Support Vector Machines (SVM)

- Ensemble Methods and Model Stacking

- Hyperparameter Tuning and Model Evaluation

Relevant entities

| Entity | Properties |
|---|---|
| KernelCenterer | Scikit-Learn transformer that centers a kernel matrix K so that the implicit feature vectors have zero mean. |
| Kernel Matrix | Matrix of pairwise inner products of data points mapped into a (possibly higher-dimensional) feature space, used as a measure of similarity. |
| Machine Learning Algorithms | Models that learn patterns from data to make predictions or decisions. |
| Support Vector Machines (SVMs) | Supervised learning models used for classification and regression analysis. |
| Principal Component Analysis (PCA) | Dimensionality reduction technique used to transform data into a lower-dimensional space. |
| Centering | Shifting data (or the implicit feature vectors behind a kernel matrix) so that they have zero mean. |


Conclusion

Scikit-Learn Preprocessing KernelCenterer centers a kernel matrix in feature space without ever computing the feature vectors, which is what kernel PCA and other methods that assume zero-mean data rely on. By fitting it on the training kernel and reusing it on test kernels, machine learning practitioners can keep training and test data consistently centered whenever they work with precomputed kernels.