Polynomial transformation is a feature transformation technique in machine learning that lets us model nonlinear relationships between variables using polynomial features. In this article, we will explore what polynomial transformation is, why it is useful, and how it can be applied in machine learning.

What is Polynomial Transformation?

Polynomial transformation is the process of creating polynomial features from the existing features in a dataset. Polynomial features are created by raising existing features to powers and multiplying them together (interaction terms), up to a chosen degree. For example, if we have a dataset with two features x and y, the degree-2 expansion adds x^2, y^2, and xy to the original x and y. These polynomial features can then be used as inputs for a machine learning algorithm to model nonlinear relationships between the features and the target.

Why is Polynomial Transformation Useful?

Polynomial transformation is useful because it allows us to model nonlinear relationships between variables using linear models. Linear models, such as linear regression, assume a linear relationship between the independent variables and the dependent variable. However, many real-world problems involve nonlinear relationships between variables, such as quadratic or cubic relationships. By creating polynomial features, we keep the model linear in its coefficients while letting it fit curves in the original variables, which can improve predictions when the underlying relationship is nonlinear.
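To make this concrete, here is a small sketch on synthetic, noise-free data that follows y = 1 + 2x + 3x^2. The data and coefficients are invented for illustration. A plain linear regression fits this curve poorly, while the same model trained on degree-2 features recovers the coefficients.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data (an assumption for this sketch): y = 1 + 2x + 3x^2, no noise.
x = np.linspace(-3, 3, 50).reshape(-1, 1)
y = 1 + 2 * x[:, 0] + 3 * x[:, 0] ** 2

# Linear regression on the raw feature can only fit a straight line.
linear_fit = LinearRegression().fit(x, y)
r2_linear = linear_fit.score(x, y)

# The same estimator on [x, x^2] can represent the quadratic exactly.
x_poly = PolynomialFeatures(degree=2, include_bias=False).fit_transform(x)
poly_fit = LinearRegression().fit(x_poly, y)
r2_poly = poly_fit.score(x_poly, y)

print(f"R^2 on raw feature:        {r2_linear:.3f}")
print(f"R^2 on polynomial features: {r2_poly:.3f}")
print("intercept:", poly_fit.intercept_, "coefficients:", poly_fit.coef_)
```

The estimator is unchanged between the two fits; only its inputs differ.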

How to Apply Polynomial Transformation in Machine Learning

To apply polynomial transformation in machine learning, we first need to create polynomial features from the existing features in our dataset. This can be done using the PolynomialFeatures class in scikit-learn, a popular machine learning library in Python. The PolynomialFeatures class takes as input the degree of the polynomial features we want to create, and then transforms the data accordingly.

Once we have created the polynomial features, we can use them as inputs for a linear model, such as linear regression. We can also use more advanced machine learning algorithms, such as support vector machines or neural networks, to model nonlinear relationships between the features.
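One common way to combine the two steps is a scikit-learn pipeline, so that the same transformation is applied at training and prediction time. The sketch below assumes synthetic cubic data with a little noise; the degree, sample size, and random seed are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data (assumed for this sketch): y = x^3 - x plus small Gaussian noise.
rng = np.random.default_rng(0)
X = rng.uniform(-2, 2, size=(100, 1))
y = X[:, 0] ** 3 - X[:, 0] + rng.normal(scale=0.1, size=100)

# The pipeline expands the features, then fits a linear model on the expansion.
model = make_pipeline(PolynomialFeatures(degree=3), LinearRegression())
model.fit(X, y)

# New inputs go through the same expansion automatically.
X_new = np.array([[0.0], [1.0], [2.0]])
predictions = model.predict(X_new)
print(predictions)  # should be close to the noise-free values 0, 0, and 6
```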

Limitations of Polynomial Transformation

While polynomial transformation is a powerful technique for modeling nonlinear relationships in machine learning, it is not without its limitations. One of the main limitations is the risk of overfitting, where the model fits too closely to the training data and fails to generalize to new data. This can be mitigated by using techniques such as cross-validation and regularization.
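The sketch below shows one way these two mitigations might be combined: 5-fold cross-validation is used to compare a low-degree model, a high-degree model, and a high-degree model with ridge (L2) regularization. The synthetic data, the degrees 2 and 15, and `alpha=1.0` are all assumptions chosen for illustration, and the resulting scores will vary with the data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Small, noisy synthetic dataset (assumed): a quadratic trend plus noise.
rng = np.random.default_rng(42)
X = rng.uniform(-3, 3, size=(30, 1))
y = 0.5 * X[:, 0] ** 2 + rng.normal(scale=1.0, size=30)

def poly_model(degree, estimator):
    # Scaling after expansion keeps high powers on a comparable scale.
    return make_pipeline(
        PolynomialFeatures(degree=degree, include_bias=False),
        StandardScaler(),
        estimator,
    )

candidates = {
    "degree 2, no regularization": poly_model(2, LinearRegression()),
    "degree 15, no regularization": poly_model(15, LinearRegression()),
    "degree 15, ridge (alpha=1.0)": poly_model(15, Ridge(alpha=1.0)),
}

# Cross-validated mean squared error on held-out folds (lower is better).
scores = {}
for name, model in candidates.items():
    cv_scores = cross_val_score(model, X, y, cv=5, scoring="neg_mean_squared_error")
    scores[name] = -cv_scores.mean()
    print(f"{name}: CV MSE = {scores[name]:.3f}")
```

Comparing held-out error rather than training error is what exposes overfitting: a model that only memorizes the training folds scores poorly on the folds it has not seen.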

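Another limitation of polynomial transformation is the rapid growth in the number of features, which is often discussed as part of the curse of dimensionality. With n original features and degree d, the expansion produces C(n + d, d) columns (including the constant bias term), so the count grows combinatorially rather than linearly: 10 features become 66 columns at degree 2 and 286 at degree 3. This can make the dataset very large and difficult to work with, especially if the number of observations is limited.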
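A quick way to see how fast the feature count grows is to compute it directly. The helper `n_poly_features` below is a hypothetical name introduced for this sketch; it uses the binomial coefficient C(n + d, d), which counts all monomials of degree at most d in n variables, and cross-checks one value against the output of PolynomialFeatures.

```python
from math import comb

import numpy as np
from sklearn.preprocessing import PolynomialFeatures

def n_poly_features(n_features, degree):
    """Number of output columns, including the bias column: C(n + d, d)."""
    return comb(n_features + degree, degree)

# Growth for a dataset with 10 original features.
for degree in (1, 2, 3, 4, 5):
    print(degree, n_poly_features(10, degree))
# 1 11
# 2 66
# 3 286
# 4 1001
# 5 3003

# Cross-check against scikit-learn for 10 features and degree 3.
X = np.zeros((1, 10))
n_out = PolynomialFeatures(degree=3).fit_transform(X).shape[1]
print(n_out)  # 286
```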

Python Code Examples

Using PolynomialFeatures from Scikit-Learn

from sklearn.preprocessing import PolynomialFeatures

import numpy as np

# create sample dataset

X = np.array([[0.5, 2], [1.5, 3]])

# create polynomial features with degree 2

poly = PolynomialFeatures(degree=2)

X_poly = poly.fit_transform(X)

print(X_poly)

[[1. 0.5 2. 0.25 1. 4. ]

 [1. 1.5 3. 2.25 4.5 9. ]]

Visualize Polynomial Transformation

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.datasets import load_boston

from sklearn.preprocessing import PolynomialFeatures

# load boston dataset from scikit-learn

boston = load_boston()

X = boston.data[:, 5:6] # select one feature

y = boston.target

# perform polynomial transformation

poly = PolynomialFeatures(degree=2)

X_poly = poly.fit_transform(X)

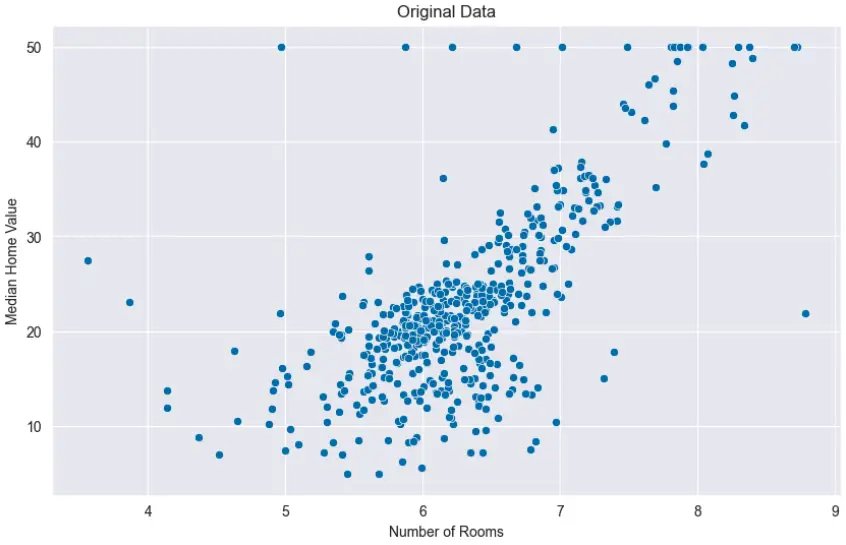

# plot the original data

plt.figure(figsize=(10,6))

sns.scatterplot(x=X[:,0], y=y)

plt.title('Original Data')

plt.xlabel('Number of Rooms')

plt.ylabel('Median Home Value')

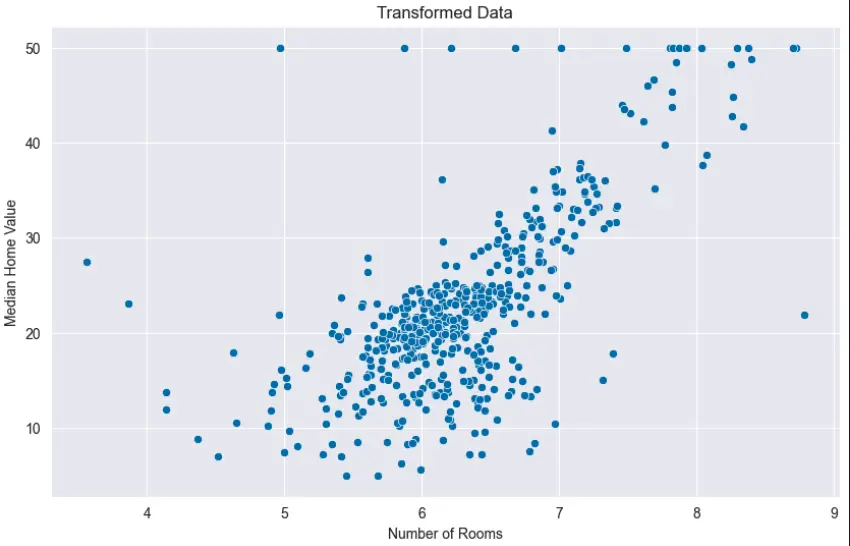

# plot the transformed data

plt.figure(figsize=(10,6))

sns.scatterplot(x=X_poly[:,1], y=y)

plt.title('Transformed Data')

plt.xlabel('Number of Rooms')

plt.ylabel('Median Home Value')

plt.show()

In this code example, we first load the Boston dataset from scikit-learn and select one feature to perform polynomial transformation on. We then use the PolynomialFeatures function from scikit-learn to perform a second-degree polynomial transformation on the selected feature. Finally, we use the Seaborn library to create scatterplots of both the original and transformed data to visualize the effect of polynomial transformation.

Useful Python Libraries for Polynomial Transformation

- scikit-learn: PolynomialFeatures()

- numpy: poly1d()

- matplotlib: plot()
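As a brief illustration of the NumPy entry, the sketch below fits a quadratic with `np.polyfit` and wraps the coefficients in `np.poly1d` so the polynomial can be evaluated like a function. The data points are made up and lie exactly on y = 2x^2 - 3x + 1.

```python
import numpy as np

# Hypothetical points lying exactly on y = 2x^2 - 3x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2 * x**2 - 3 * x + 1

# Least-squares fit of a degree-2 polynomial; coefficients come highest power first.
coeffs = np.polyfit(x, y, deg=2)

# poly1d turns the coefficient array into a callable polynomial.
p = np.poly1d(coeffs)
value_at_5 = p(5.0)

print(coeffs)      # approximately [ 2. -3.  1.]
print(value_at_5)  # approximately 36.0
```

This works directly on a single variable; for several input features with interaction terms, PolynomialFeatures is the more convenient tool.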

Datasets Useful for Polynomial Transformation

California Housing Dataset

from sklearn.datasets import fetch_california_housing

cali = fetch_california_housing()

X = cali.data

y = cali.target

To Know Before You Learn Polynomial Transformation

- Basic knowledge of linear regression

- Understanding of feature engineering

- Familiarity with polynomial functions and their properties

- Experience with Python programming language and related libraries such as NumPy, Pandas, and Scikit-learn

Important Concepts in Polynomial Transformation

- Linear regression

- Feature engineering

- Overfitting and underfitting

- Bias-variance tradeoff

- Regularization

What’s Next?

- Regularization techniques in linear regression

- Feature selection and dimensionality reduction

- Non-linear regression models such as decision trees, random forests, and neural networks

Relevant Entities

| Entity | Properties |
|---|---|
| Polynomial features | Created by taking powers and products of existing features up to a certain degree |
| Linear models | Assume a linear relationship between independent and dependent variables |
| Nonlinear relationships | Exist between variables in many real-world problems |
| Overfitting | Risk of fitting the model too closely to the training data and failing to generalize to new data |
| Curse of dimensionality | The number of features grows combinatorially with the number of original features and the polynomial degree |
| Scikit-learn | A popular machine learning library in Python that provides the PolynomialFeatures class for creating polynomial features |
| Polynomial Transformation | Process of creating polynomial features from existing features in a dataset to model nonlinear relationships between variables using linear models |

Frequently Asked Questions

1. What is polynomial transformation?

Process of creating polynomial features from existing features in a dataset.

2. What are polynomial features?

Features created by raising existing features to powers and multiplying them together, up to a certain degree.

3. Why do we need polynomial transformation?

To model nonlinear relationships between variables using linear models.

4. What is the risk of overfitting with polynomial features?

Risk of fitting the model too closely to the training data and failing to generalize to new data.

5. What is the curse of dimensionality with polynomial features?

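The number of features grows combinatorially with the number of original features and the degree of the polynomial: n features expanded to degree d produce C(n + d, d) columns, including the bias term.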

6. What library provides PolynomialFeatures class for creating polynomial features?

Scikit-learn, a popular machine learning library in Python.

Conclusion

Polynomial transformation is a powerful technique in machine learning that allows us to model nonlinear relationships between variables using linear models. By creating polynomial features, we can transform the data to fit a linear model, allowing us to make accurate predictions even when the underlying relationship is nonlinear. While there are limitations to polynomial transformation, such as overfitting and the curse of dimensionality, it remains a useful tool in the machine learning toolbox.
